Shopify Data Tech Stack

Explore the tech stack Shopify uses to process 284 million peak requests per minute and generate more than $11 billion in sales.

Learn how Shopify handles hundreds of millions of peak requests per minute, powering billions in sales through a robust, scalable infrastructure. This overview shares the key tools, architectures, and innovations Shopify leverages for data ingestion, processing, storage, and analytics to support global commerce.

Metrics

284 million peak requests per minute to Shopify’s app servers during peak sales events, powering $11.5 billion in sales over four days in 2024, supported by 99.9% uptime.

$4.6 million in peak sales per minute and up to 967,000 requests per second during Black Friday and Cyber Monday.
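To put these headline figures on a common scale, here is a back-of-the-envelope sketch. It converts the app-server peak from requests per minute to requests per second and compares peak sales per minute with the four-day average. It uses only the numbers quoted above and assumes, for the average only, that the $11.5 billion was spread evenly across the four days.

```python
# Back-of-the-envelope conversions of the headline 2024 figures quoted above.
PEAK_REQUESTS_PER_MINUTE = 284_000_000
TOTAL_SALES_USD = 11_500_000_000
EVENT_DAYS = 4
PEAK_SALES_PER_MINUTE_USD = 4_600_000

MINUTES_PER_DAY = 24 * 60


def per_second(per_minute: float) -> float:
    """Convert a per-minute rate to a per-second rate."""
    return per_minute / 60


def average_sales_per_minute(total: float, days: int) -> float:
    """Average sales per minute, assuming sales were spread evenly over the event."""
    return total / (days * MINUTES_PER_DAY)


peak_rps = per_second(PEAK_REQUESTS_PER_MINUTE)
avg_sales = average_sales_per_minute(TOTAL_SALES_USD, EVENT_DAYS)
peak_to_avg = PEAK_SALES_PER_MINUTE_USD / avg_sales

print(f"Peak app-server load: ~{peak_rps / 1e6:.2f}M requests/second")
print(f"Average sales: ~${avg_sales / 1e6:.2f}M/minute")
print(f"Peak-to-average sales ratio: ~{peak_to_avg:.1f}x")
```

Running it prints roughly 4.73M requests per second, about $2.00M in average sales per minute, and a peak-to-average ratio of about 2.3x.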

Shopify’s infrastructure is 100% powered by Google Cloud, leveraging the same dependable network as Gmail, Search, and YouTube.

Kafka has handled 66 million messages per second at peak.

76,000 Spark jobs processing 300 TB of data per day.

Airflow runs 10,000 DAGs, with 400 tasks running at any given moment and over 150,000 runs executed per day.

DBT has 100+ models with 400+ unit tests, which run in about 3 minutes on average.

This content draws on multiple sources, including the Shopify Blog, the GCP Blog, and other public articles. References for deeper dives appear throughout.

Platform

Google Cloud Platform

Shopify runs entirely on Google Cloud Platform (GCP), benefiting from its reliability, scalability, and security. The partnership lets Shopify deliver seamless merchant experiences during traffic surges such as Black Friday Cyber Monday (BFCM) and the holiday season, scaling almost instantly to absorb billions of app server requests. GCP’s infrastructure is integral to Shopify’s uptime, performance, and integration of AI-powered solutions.

📖 Integration details: Shopify Partner Spotlight: Google Cloud

Messaging System

Kafka

Kafka is the backbone of Shopify’s messaging, providing the real-time streaming behind an event-driven architecture built for ecommerce scale. It carries events for order processing, inventory updates, and notifications, enabling high availability and reliable communication across distributed systems.
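Kafka only guarantees ordering within a partition, so event streams like these are usually keyed by an entity ID: every event for the same order then lands on the same partition and is consumed in the order it was produced. The stdlib-only sketch below models that producer-side behavior. The topic layout, partition count, and event names are hypothetical, and `zlib.crc32` stands in for the murmur2 hash that Kafka's default Java partitioner applies to the serialized key, so real partition numbers will differ.

```python
import json
import zlib
from collections import defaultdict
from dataclasses import dataclass, field

NUM_PARTITIONS = 6  # hypothetical partition count for an "orders" topic


@dataclass
class Event:
    order_id: str
    kind: str  # hypothetical kinds: "order_created", "inventory_reserved", ...
    payload: dict = field(default_factory=dict)


def partition_for(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a message key to a partition.

    Stand-in for Kafka's default partitioner (which uses murmur2); the
    property that matters is the same: equal keys map to one partition.
    """
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def produce(events):
    """Append each event to its key's partition, preserving send order."""
    log = defaultdict(list)
    for event in events:
        value = json.dumps({"kind": event.kind, **event.payload})
        log[partition_for(event.order_id)].append((event.order_id, value))
    return log


events = [
    Event("order-1", "order_created", {"total": 42}),
    Event("order-2", "order_created", {"total": 7}),
    Event("order-1", "inventory_reserved"),
    Event("order-1", "notification_sent"),
]
log = produce(events)

# All events for order-1 sit in one partition, in the order they were produced.
p = partition_for("order-1")
print([json.loads(v)["kind"] for k, v in log[p] if k == "order-1"])
```

Consumers reading that partition see `order_created`, `inventory_reserved`, and `notification_sent` in sequence, while events for other orders can be spread across partitions and processed in parallel.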

📖 More on tech choices: Shopify Tech Stack - ByteByteGo

Processing

Beam/Dataflow

Shopify uses Apache Beam running on Google Cloud Dataflow to run large-scale data processing pipelines in both batch and streaming modes. This enables scalable ETL workflows and near real-time analytics on customer events and operational metrics.
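A typical streaming pattern in Beam is counting events per key in fixed event-time windows, written in the Python SDK roughly as `beam.WindowInto(window.FixedWindows(60))` followed by `beam.combiners.Count.PerKey()`. Rather than depend on the Beam SDK, the stdlib-only sketch below mimics that window assignment and per-key count so the semantics are easy to see. The event names and timestamps are made up, and it ignores watermarks, late data, and triggers, which Beam handles for real streams.

```python
from collections import Counter
from typing import Iterable, Tuple

Event = Tuple[float, str]  # (event-time timestamp in seconds, key)


def fixed_window_start(timestamp: float, size: int, offset: int = 0) -> float:
    """Start of the fixed window containing `timestamp`.

    Mirrors Beam's FixedWindows assignment: windows are [start, start + size).
    """
    return timestamp - (timestamp - offset) % size


def count_per_key_per_window(events: Iterable[Event], size: int = 60) -> Counter:
    """Count events per (window_start, key), like WindowInto + Count.PerKey."""
    counts: Counter = Counter()
    for ts, key in events:
        counts[(fixed_window_start(ts, size), key)] += 1
    return counts


events = [
    (0.5, "add_to_cart"),
    (30.0, "checkout"),
    (59.9, "add_to_cart"),
    (60.0, "add_to_cart"),  # exactly on the boundary: belongs to the next window
    (125.0, "checkout"),
]
for (start, key), n in sorted(count_per_key_per_window(events).items()):
    print(f"[{start:>5.0f}s, {start + 60:>5.0f}s) {key}: {n}")
```

Because windows are half-open, the event at exactly 60 seconds is counted in the second window, not the first.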

📖 Recommended Reading: How Shopify Improved Consumer Search Intent with ML

Spark (Starscream)

Shopify built Starscream, an internal PySpark-based data pipeline platform, to efficiently run tens of thousands of jobs processing hundreds of terabytes of data daily. It abstracts common complex patterns into reusable components.

It was designed to accelerate the translation of SQL prototypes into scalable PySpark jobs, helping teams build robust data models quickly and at scale.
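Starscream's API is internal, so it is not shown here. Instead, the sketch below illustrates the kind of common complex pattern such a platform might package as a reusable component: keeping only the latest version of each record. In SQL this is usually `ROW_NUMBER() OVER (PARTITION BY id ORDER BY updated_at DESC) = 1`; in PySpark, `F.row_number().over(Window.partitionBy("id").orderBy(F.col("updated_at").desc()))`. The `Component` interface, `LatestPerKey`, and `run_pipeline` are hypothetical names, and the pure-Python version stands in for a Spark job so it runs without a cluster.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Any, Dict, List

Row = Dict[str, Any]


class Component(ABC):
    """Hypothetical interface for a reusable pipeline step (not Starscream's API)."""

    @abstractmethod
    def apply(self, rows: List[Row]) -> List[Row]:
        ...


@dataclass
class LatestPerKey(Component):
    """Keep the most recent row per key: the pure-Python analogue of
    ROW_NUMBER() OVER (PARTITION BY key ORDER BY order_by DESC) = 1."""

    key: str
    order_by: str

    def apply(self, rows: List[Row]) -> List[Row]:
        latest: Dict[Any, Row] = {}
        for row in rows:
            k = row[self.key]
            if k not in latest or row[self.order_by] > latest[k][self.order_by]:
                latest[k] = row
        return sorted(latest.values(), key=lambda r: r[self.key])


def run_pipeline(rows: List[Row], steps: List[Component]) -> List[Row]:
    """Apply components in order, the way a job composes reusable steps."""
    for step in steps:
        rows = step.apply(rows)
    return rows


shops = [
    {"shop_id": 1, "plan": "basic", "updated_at": "2024-01-01"},
    {"shop_id": 1, "plan": "plus", "updated_at": "2024-06-01"},
    {"shop_id": 2, "plan": "basic", "updated_at": "2024-03-15"},
]
print(run_pipeline(shops, [LatestPerKey(key="shop_id", order_by="updated_at")]))
```

Packaging the pattern once means each team configures `key` and `order_by` instead of re-deriving the window logic for every model.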

📖 More on Starscream: The Complex Data Models Behind Shopify's Tax Insights Feature

Trino

Trino serves as a query engine for interactive analytics, federating data across storage systems such as BigQuery. It excels at large-scale SQL workloads and gives analysts low-latency access to the lakehouse.
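Trino federates sources through catalogs: each connector is mounted as a catalog, and tables are addressed as `catalog.schema.table`, so one query can join, say, an Iceberg table in the lake with a BigQuery table. The sketch below builds such a query and, if the `trino` Python client is installed, runs it through the client's DB-API interface. The host, catalog, schema, table, and column names are hypothetical placeholders.

```python
from typing import List, Tuple


def qualified(catalog: str, schema: str, table: str) -> str:
    """Fully qualified Trino table name: catalog.schema.table."""
    return f"{catalog}.{schema}.{table}"


def federated_revenue_query(orders_table: str, shops_table: str) -> str:
    """Join a lake table with a warehouse table in a single Trino query."""
    return (
        "SELECT s.country, sum(o.total_price) AS revenue\n"
        f"FROM {orders_table} AS o\n"
        f"JOIN {shops_table} AS s ON o.shop_id = s.shop_id\n"
        "GROUP BY s.country"
    )


def run(sql: str, host: str = "trino.example.internal", port: int = 8080) -> List[Tuple]:
    """Execute via the `trino` client (pip install trino); host/port are placeholders."""
    from trino.dbapi import connect  # lazy import: the builder works without the client

    conn = connect(host=host, port=port, user="analyst")
    cur = conn.cursor()
    cur.execute(sql)
    return cur.fetchall()


sql = federated_revenue_query(
    qualified("iceberg", "sales", "orders"),  # hypothetical Iceberg catalog
    qualified("bigquery", "core", "shops"),   # hypothetical BigQuery catalog
)
print(sql)
```

The analyst writes ordinary SQL; which system each table lives in is expressed only through the catalog prefix.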

📖 Read More: Shopify's Path to a Faster Trino Query Execution: Infrastructure

Orchestrator

Airflow

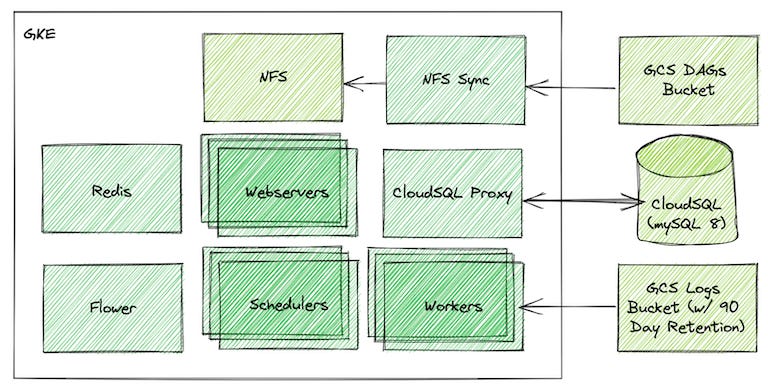

Shopify uses Apache Airflow for DAG-driven automation of ETL, model training, and data pipeline management. The team has shared the scaling lessons and customizations that let Airflow support thousands of workflows and smooth production deployments, capabilities that become essential as data volumes and scheduling complexity grow.
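At its core, a DAG-driven scheduler runs a task only after all of its upstream tasks have finished. The stdlib-only sketch below groups tasks into "waves" with Kahn's topological sort, where every task in a wave could run in parallel. It is a simplified model, not Airflow's scheduler (which also handles retries, pools, and schedules), and the task names are hypothetical. In an Airflow DAG file the same dependencies would be declared with the `>>` operator, for example `extract >> transform >> load`.

```python
from collections import defaultdict
from typing import Dict, List, Set


def execution_waves(upstream: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves using Kahn's algorithm.

    `upstream` maps each task to the set of tasks it depends on. Every task
    in a wave has all of its dependencies in earlier waves, so a wave's
    tasks can run in parallel. Raises ValueError if the graph has a cycle.
    """
    tasks = set(upstream) | {d for deps in upstream.values() for d in deps}
    remaining = {t: len(upstream.get(t, set())) for t in tasks}
    downstream: Dict[str, List[str]] = defaultdict(list)
    for task, deps in upstream.items():
        for dep in deps:
            downstream[dep].append(task)

    waves: List[List[str]] = []
    ready = sorted(t for t, n in remaining.items() if n == 0)
    while ready:
        waves.append(ready)
        next_ready = []
        for task in ready:
            for child in downstream[task]:
                remaining[child] -= 1
                if remaining[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)

    if sum(len(w) for w in waves) != len(tasks):
        raise ValueError("cycle detected: not a DAG")
    return waves


# Hypothetical ETL + model-training DAG.
dag = {
    "transform_orders": {"extract_orders"},
    "transform_shops": {"extract_shops"},
    "build_features": {"transform_orders", "transform_shops"},
    "train_model": {"build_features"},
    "publish_report": {"build_features"},
}
for i, wave in enumerate(execution_waves(dag)):
    print(i, wave)
```

The cycle check matters: a dependency loop would leave some tasks waiting forever, which is why Airflow rejects DAG files that contain one.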

📖 Recommended Reading: Lessons Learned Scaling Apache Airflow

Warehouse

BigQuery

BigQuery is Shopify’s central data warehouse, powering analytics, reporting, and dashboards for business teams and merchants. Its elastic scalability and fast query responses underpin key features such as real-time sales insights, optimization reports, and recommendation engines, while production-grade data models are managed with DBT.

🎙️ Recommended Podcast: Data Warehouse at Shopify

Transformation

DBT

Shopify relies heavily on DBT to scale its transformations and unit testing, which are used by 200+ data scientists across the company.

Alongside Starscream, Shopify also has a tool called Seamster, which lets users skip the conversion to PySpark and build scalable pipelines directly with DBT and BigQuery.

📖 More on Seamster: Production Grade Workflow with SQL Modelling

Shopify has also published a detailed video on how it performs unit testing at scale.
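DBT's built-in unit tests (available since dbt Core 1.8) follow a given/expect pattern: mock the model's input rows, run the model's SQL, and compare the result with the expected rows. The sketch below reproduces that pattern in Python, using an in-memory SQLite database as a stand-in for BigQuery. The model SQL, table, and column names are hypothetical, and this is not Shopify's testing framework.

```python
import sqlite3
from typing import Any, Dict, List

Row = Dict[str, Any]

# Hypothetical model: daily gross sales per shop, excluding test orders.
MODEL_SQL = """
SELECT shop_id, order_date, SUM(total_price) AS gross_sales
FROM orders
WHERE is_test = 0
GROUP BY shop_id, order_date
ORDER BY shop_id, order_date
"""


def run_model(given: Dict[str, List[Row]], sql: str) -> List[Row]:
    """Load mocked input tables into SQLite, run the model SQL, return rows."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row
    for table, rows in given.items():
        cols = list(rows[0])
        conn.execute(f"CREATE TABLE {table} ({', '.join(cols)})")
        placeholders = ", ".join("?" for _ in cols)
        conn.executemany(
            f"INSERT INTO {table} VALUES ({placeholders})",
            [tuple(r[c] for c in cols) for r in rows],
        )
    result = [dict(r) for r in conn.execute(sql)]
    conn.close()
    return result


def unit_test(given: Dict[str, List[Row]], expect: List[Row], sql: str) -> None:
    """Fail with a readable message if the model output differs from `expect`."""
    actual = run_model(given, sql)
    assert actual == expect, f"expected {expect}, got {actual}"


unit_test(
    given={"orders": [
        {"shop_id": 1, "order_date": "2024-11-29", "total_price": 30, "is_test": 0},
        {"shop_id": 1, "order_date": "2024-11-29", "total_price": 12, "is_test": 0},
        {"shop_id": 1, "order_date": "2024-11-29", "total_price": 99, "is_test": 1},
        {"shop_id": 2, "order_date": "2024-11-29", "total_price": 5, "is_test": 0},
    ]},
    expect=[
        {"shop_id": 1, "order_date": "2024-11-29", "gross_sales": 42},
        {"shop_id": 2, "order_date": "2024-11-29", "gross_sales": 5},
    ],
    sql=MODEL_SQL,
)
print("unit test passed")
```

Because the fixtures are tiny and live in memory, hundreds of tests like this can run in minutes, which is the property that makes SQL unit testing practical at scale.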

Lakehouse

Google Cloud Storage & Iceberg

Raw and staged data lands in Google Cloud Storage (GCS), forming the foundation of Shopify’s data lake. Combined with Apache Iceberg tables, GCS provides a lakehouse architecture that hundreds of downstream users and consumers can access easily.
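Part of what makes Iceberg tables cheap to query on object storage such as GCS is that Iceberg tracks every data file, along with its partition values and column statistics, in table metadata, so engines can skip files without listing buckets. With hidden partitioning, a table partitioned by `day(event_ts)` stores the partition value as whole days since 1970-01-01, and a timestamp filter is translated into a day range automatically. The sketch below models that pruning step in plain Python; the bucket, paths, and `DataFile` structure are simplified, hypothetical stand-ins for Iceberg's manifest files.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def days_transform(ts: datetime) -> int:
    """Iceberg's `day` partition transform: whole days since 1970-01-01 (UTC)."""
    return (ts - EPOCH).days


@dataclass
class DataFile:
    """Simplified manifest entry: a file path plus its partition value."""
    path: str
    event_day: int  # value of day(event_ts) shared by every row in the file


def prune(files: List[DataFile], start: datetime, end: datetime) -> List[str]:
    """Return files that may hold rows with start <= event_ts <= end.

    Only metadata is consulted; files outside the day range are never opened.
    """
    lo, hi = days_transform(start), days_transform(end)
    return [f.path for f in files if lo <= f.event_day <= hi]


files = [
    DataFile(
        f"gs://example-lake/events/data/event_ts_day=2024-11-{d}/part-0.parquet",
        days_transform(datetime(2024, 11, d, tzinfo=timezone.utc)),
    )
    for d in (27, 28, 29, 30)
]
# Query a single day: 2024-11-29 (Black Friday 2024).
black_friday = prune(
    files,
    datetime(2024, 11, 29, 0, 0, tzinfo=timezone.utc),
    datetime(2024, 11, 29, 23, 59, 59, tzinfo=timezone.utc),
)
print(black_friday)
```

Only one of the four files survives pruning, so the engine reads a quarter of the data without the query author ever mentioning the partition column.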

🎥 Recommended Video: Iceberg at Shopify

Data Store

Druid

Druid is used for high-performance, low-latency OLAP analytics. It serves operational dashboards, providing fast filtering, drill-down, and aggregated metrics for merchants and internal teams, and supports millions of queries daily. Read more at Apache Druid.
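One reason Druid answers dashboard queries quickly is ingestion-time rollup: rows that share the same truncated timestamp (set by `queryGranularity`) and the same dimension values are pre-aggregated into a single row, so queries scan far fewer rows. The sketch below models rollup at minute granularity with a count metric and a sum metric. The dimension and metric names are hypothetical, and real Druid rollup is configured in an ingestion spec rather than written as code like this.

```python
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, List, Tuple


def truncate_to_minute(ts: datetime) -> datetime:
    """Truncate a timestamp, as queryGranularity = "minute" would."""
    return ts.replace(second=0, microsecond=0)


def rollup(rows: List[dict], dims: Tuple[str, ...]) -> List[dict]:
    """Pre-aggregate rows sharing (truncated __time, dimension values).

    Produces a `count` metric (number of raw rows) and a `sum_amount` metric.
    """
    agg: Dict[tuple, Dict[str, float]] = defaultdict(lambda: {"count": 0, "sum_amount": 0.0})
    for row in rows:
        key = (truncate_to_minute(row["__time"]),) + tuple(row[d] for d in dims)
        agg[key]["count"] += 1
        agg[key]["sum_amount"] += row["amount"]
    return [
        {"__time": key[0], **dict(zip(dims, key[1:])), **metrics}
        for key, metrics in sorted(agg.items())
    ]


def at(h: int, m: int, s: int) -> datetime:
    return datetime(2024, 11, 29, h, m, s, tzinfo=timezone.utc)


raw = [
    {"__time": at(12, 0, 5), "country": "CA", "channel": "online", "amount": 20.0},
    {"__time": at(12, 0, 40), "country": "CA", "channel": "online", "amount": 15.0},
    {"__time": at(12, 0, 59), "country": "US", "channel": "pos", "amount": 50.0},
    {"__time": at(12, 1, 2), "country": "CA", "channel": "online", "amount": 5.0},
]
rolled = rollup(raw, dims=("country", "channel"))
print(f"{len(raw)} raw rows -> {len(rolled)} rolled-up rows")
```

Rollup trades per-event detail for speed: once rows are merged, individual events can no longer be queried, so it suits aggregated dashboard metrics rather than event-level lookups.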

Dashboard

Polaris-Viz

Polaris-Viz is Shopify’s custom React visualization library, originally designed for both internal and external dashboards. The open-source project is deprecated, but the library is still widely used internally.

📖 Background: React Library for Consistent Visualization

💬 Shopify’s tech stack pairs GCP with other core technologies to build a modern architecture that scales under extreme demand for both online and offline workloads.
