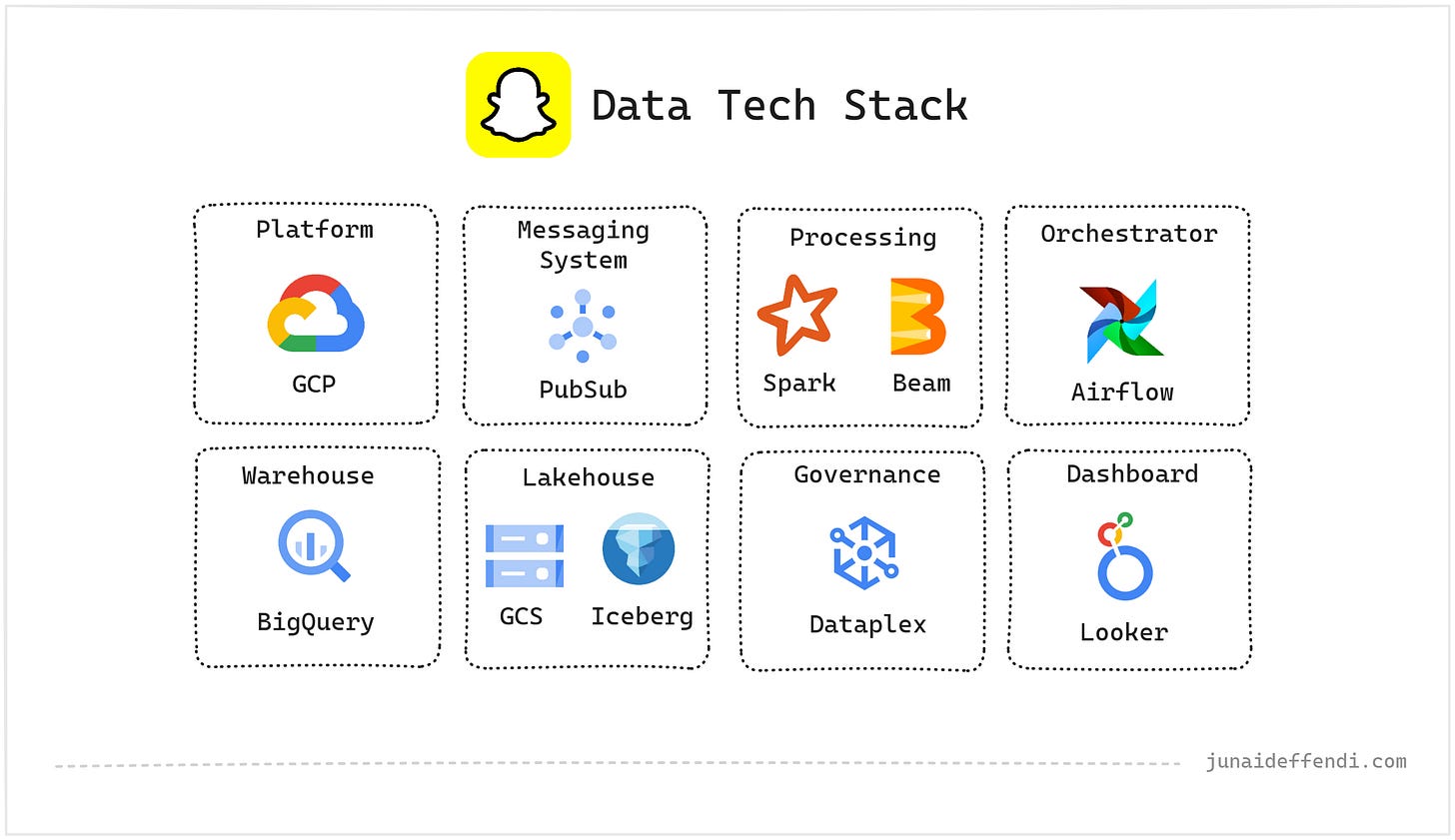

Snapchat Data Tech Stack

Learn how Snapchat ingests ~2 trillion events per day using Google Cloud Platform.

Snapchat is a tech company that handles complex, large-scale challenges in the data space. Today, we will explore the tools and technologies Snapchat uses for data ingestion, transformation, governance, and more.

Metrics

Ingesting 4+ TB of data into BQ every day, source.

Ingesting 1.8 trillion events per day at peak, source.

The data warehouse contains more than 200 PB of data across 30k GCS buckets, source.

Snapchat receives 5 billion Snaps per day, source.

Snapchat has 3,000 Airflow DAGs with 330,000 tasks, source.
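To put these numbers in perspective, here is a quick back-of-the-envelope calculation. It is only a rough sketch: it assumes the peak event volume is spread evenly across the day, that 1 PB equals 1,000 TB, and that data and tasks are distributed uniformly across buckets and DAGs, none of which the sources confirm.

```python
# Back-of-the-envelope rates derived from the published metrics above.
# Assumptions (not from the sources): uniform distribution, 1 PB = 1,000 TB.
SECONDS_PER_DAY = 24 * 60 * 60

peak_events_per_day = 1.8e12    # 1.8 trillion events per day at peak
dags = 3_000                    # Airflow DAGs
task_instances = 330_000        # Airflow tasks
warehouse_pb = 200              # PB of data in the warehouse
gcs_buckets = 30_000            # GCS buckets

events_per_second = peak_events_per_day / SECONDS_PER_DAY
tasks_per_dag = task_instances / dags
tb_per_bucket = warehouse_pb * 1_000 / gcs_buckets

print(f"{events_per_second:,.0f} events per second")
print(f"{tasks_per_dag:.0f} tasks per DAG on average")
print(f"{tb_per_bucket:.1f} TB per bucket on average")
```

That works out to roughly 20.8 million events per second, 110 tasks per DAG, and about 6.7 TB per bucket on average.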

This content is based on multiple sources, including the Snap blog, open source websites, job descriptions, and other public articles. You will find references for a deeper dive as you read.

Platform

GCP

Snapchat uses GCP as its platform for all data infrastructure, processing, and analytical needs. It has been a heavy user of a variety of GCP services.

💡Snapchat’s multi-cloud architecture includes AWS; however, there is no publicly available information about its use for offline data processing.

Messaging System

PubSub

Real-time events are ingested through GCP's native Pub/Sub service. The fully managed service scales seamlessly during peak hours and works well with the GCP Dataflow service.

Processing

Beam

Snapchat relies on GCP Dataflow, a fully managed Apache Beam service, to process data in both streaming and batch modes.
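To illustrate what "both streaming and batch modes" means in practice, here is a minimal sketch in plain Python. It is not the Apache Beam API and not Snapchat's code: it only shows the general idea of writing one piece of logic (counting events per fixed time window) and applying it to either a bounded list or an iterator of events. The event names, timestamps, and the 60-second window are all hypothetical.

```python
from collections import Counter

WINDOW_SECONDS = 60  # hypothetical fixed-window size


def count_per_window(events, window_seconds=WINDOW_SECONDS):
    """Count events per (window_start, event_type).

    `events` is any iterable of (timestamp_seconds, event_type) pairs,
    so the same function accepts a list (batch) or a generator (stream).
    """
    counts = Counter()
    for timestamp, event_type in events:
        # Assign each event to the fixed window that contains its timestamp.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, event_type)] += 1
    return dict(counts)


# Hypothetical sample events: (timestamp in seconds, event type).
sample_events = [
    (0, "snap_sent"),
    (30, "snap_sent"),
    (61, "story_view"),
    (119, "snap_sent"),
]


def event_stream():
    """Stand-in for an unbounded source; here it just replays the sample."""
    yield from sample_events


batch_result = count_per_window(sample_events)
stream_result = count_per_window(event_stream())
print(batch_result == stream_result)
```

A real streaming runner would emit each window's result as the window closes instead of consuming the whole input first; the sketch only shows that the aggregation logic itself does not need to change between the two modes.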

Spark

Snapchat extensively uses Spark (GCP DataProc) for both batch and stream processing, making it a core component of the feature generation architecture that powers its recommendation systems.

📖 Recommended Reading: Speed Up Feature Engineering

Orchestrator

Airflow

Snapchat has been using Airflow since 2016 and has faced numerous challenges over the years, which were addressed by upgrading to Airflow 2+.

As of 2024, Snap runs over 3,000 DAGs that execute more than 330,000 task instances daily, covering ETL, reporting/analytics, machine learning workloads, and more. Snap relies on 200+ custom operators to support a wide range of services and use cases, serving over 1,000 active Airflow users.
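For readers new to orchestrators, the sketch below shows what a DAG of tasks boils down to: a set of tasks plus their dependencies, from which a valid execution order is derived. It uses Python's standard-library `graphlib` rather than Airflow's own API, and the task names are hypothetical, not taken from Snap's pipelines.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks; each task maps to the set of tasks it depends on.
dependencies = {
    "extract_events": set(),
    "transform_events": {"extract_events"},
    "load_to_warehouse": {"transform_events"},
    "refresh_report": {"load_to_warehouse"},
    "train_model": {"load_to_warehouse"},
}


def execution_order(deps):
    """Return one valid order in which every task runs after its dependencies."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

An orchestrator such as Airflow adds scheduling, retries, and operators on top of this basic idea, and can run independent tasks (here `refresh_report` and `train_model`) in parallel once their shared upstream task has finished.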

Warehouse

BigQuery

Snapchat heavily relies on BigQuery for its data warehousing needs. All event-driven data from various sources ends up in the warehouse.

BigQuery is used by many teams across different organizations for both ad hoc and scheduled workflows, orchestrated through Airflow.

Lakehouse

GCS & Iceberg

Alongside centralized data warehousing, Snapchat uses a lakehouse architecture by combining Google Cloud Storage (GCS) with Apache Iceberg. This allows them to efficiently access large datasets without duplication in BigQuery.

With BigLake integration, GCS data becomes directly accessible in BigQuery, giving users a native-like experience.

📖 Recommended Reading: Apache Iceberg support for BigLake

ML teams are among the primary users of Iceberg and BigQuery tables in their Bento Platform.

Governance

Dataplex

Unsurprisingly for such a heavy GCP user, Snapchat also uses GCP's Dataplex service for data management. It enables the creation of a logical data organization layer spanning more than 1,500 GCP projects, allowing teams to manage and govern data without physically moving it.

Dashboard

Looker

Looker plays a central role in enabling data-driven decisions across the company. Integrated with BigQuery, it empowers teams to self-serve their data needs.

Snapchat has made significant investments in Looker, partnering with GCP to upskill teams worldwide, as per this source.

💬 Snapchat primarily uses GCP for data analytics and its data platform, but with its recent adoption of AWS, there is likely some information that I am unaware of. Please let me know in the comments.