How Delta Lake Works

Understand how Delta Lake handles reads and writes using the transaction log, ensuring ACID guarantees through snapshot isolation and optimistic concurrency control.

Delta Lake is an open-source storage layer that brings ACID transactions, schema enforcement, and unified streaming + batch processing to cloud data lakes. Originally developed by Databricks, it was created to address data consistency and reliability issues in traditional data lakes.

Delta allows compute engines like Apache Spark, Trino, and Flink to access the same data reliably via a transaction log that records every change made to a table.

Architecture & Components

Delta Lake’s architecture centers around a small set of well-defined components:

Parquet Data Files: The actual table data is stored in columnar Parquet format within a directory.

_delta_log/: A transaction log directory containing a series of JSON and Parquet files that track all changes to the table. This log ensures ACID guarantees.

Table Metadata: Stored in the JSON log files; includes the schema, partitioning, and table properties (an example log entry is shared later).

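Checkpoint: Stored as Parquet files inside _delta_log/; holds a compacted snapshot of the table state at a given version, so readers do not have to replay every JSON file from the beginning.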

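Commit Protocol: Ensures atomic commits using optimistic concurrency control. Each commit must create the next numbered log file (e.g. 00000000000000000001.json; versions are zero-padded to 20 digits), and only one writer can create a given file name, so a writer that loses the race has to retry against the new version.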

Readers/Writers: Clients read consistent snapshots by replaying the log, and writers create new log entries to record changes. Each transaction creates a new JSON file.

💡You can create a Delta table directly on storage, without needing a catalog.
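To make the layout of _delta_log/ more concrete, here is a minimal Python sketch of how commit and checkpoint file names map to table versions. The helper names (commit_file_name, checkpoint_file_name, classify_log_files) are made up for illustration, and the sketch only covers single-file checkpoints; it is not how any particular engine implements log listing.

```python
import re

# Commit files and single-file checkpoints use a 20-digit, zero-padded version.
COMMIT_RE = re.compile(r"^(\d{20})\.json$")
CHECKPOINT_RE = re.compile(r"^(\d{20})\.checkpoint\.parquet$")


def commit_file_name(version: int) -> str:
    """Name of the JSON commit file for a table version."""
    return f"{version:020d}.json"


def checkpoint_file_name(version: int) -> str:
    """Name of a single-file Parquet checkpoint for a table version."""
    return f"{version:020d}.checkpoint.parquet"


def classify_log_files(names):
    """Split a _delta_log/ listing into sorted commit and checkpoint versions.

    Files that match neither pattern (for example _last_checkpoint) are ignored.
    """
    commits, checkpoints = [], []
    for name in names:
        if (m := COMMIT_RE.match(name)):
            commits.append(int(m.group(1)))
        elif (m := CHECKPOINT_RE.match(name)):
            checkpoints.append(int(m.group(1)))
    return sorted(commits), sorted(checkpoints)


# Hypothetical listing of a small table's _delta_log/ directory.
listing = [
    commit_file_name(0),
    commit_file_name(1),
    checkpoint_file_name(10),
    "_last_checkpoint",
]
commit_versions, checkpoint_versions = classify_log_files(listing)
```

Because the version is encoded in the file name, listing the directory is enough to find the latest checkpoint and the commits that come after it.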

Internal Mechanics

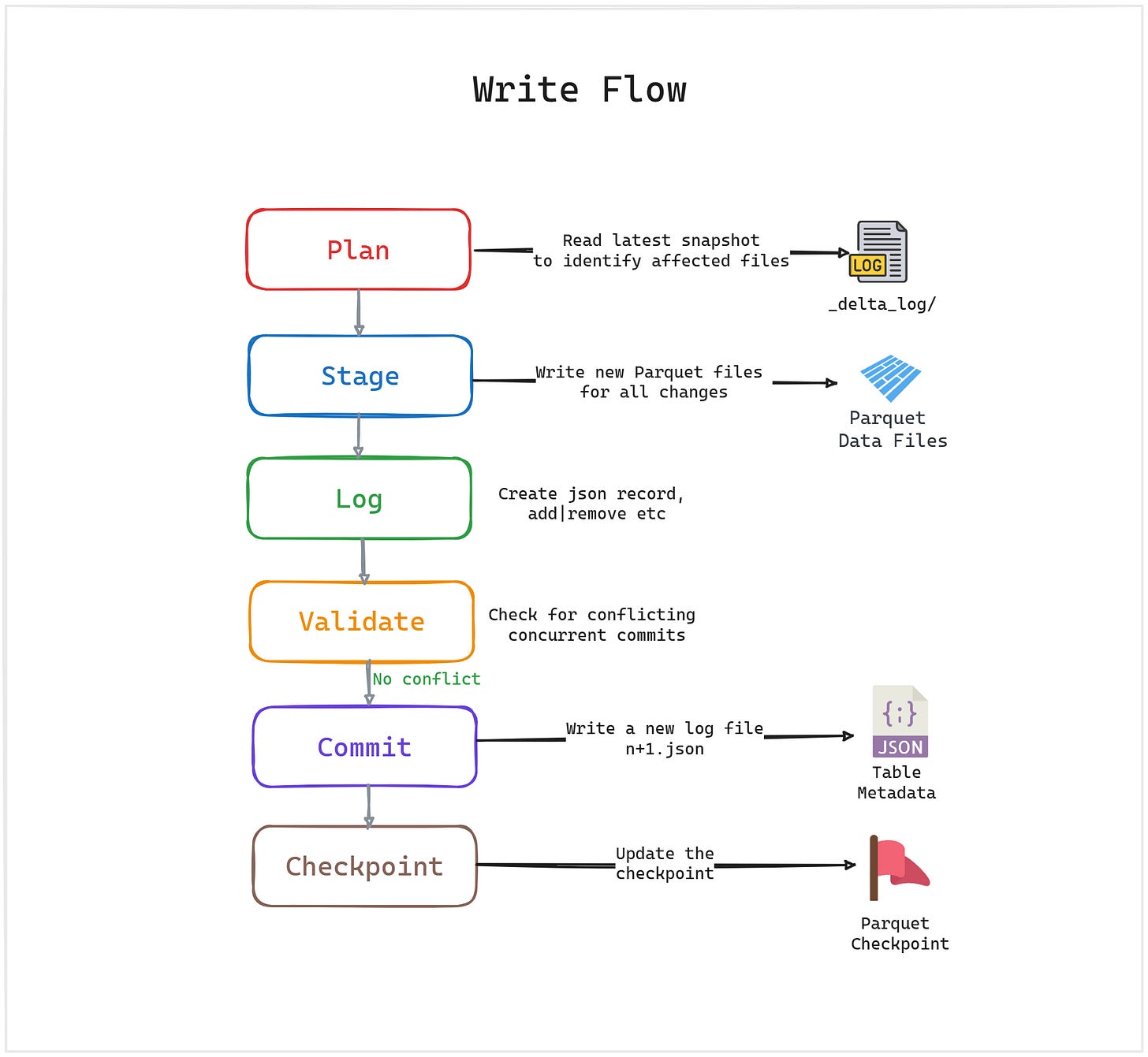

Write Flow

Plan: Read latest snapshot (if needed) to identify affected files.

Stage: Write new Parquet files for all changes.

Log: Create JSON "add" (and optional "remove"/"metaData") actions for each change.

{ "add": { "path": "part-00001-1234abcd.snappy.parquet", "partitionValues": { "year": "2024", "month": "06" }, "size": 345678, "modificationTime": 1686780000000, "dataChange": true, "stats": "{\"numRecords\": 50000, \"minValues\": {\"id\": 1}, \"maxValues\": {\"id\": 50000}}" } }

Validate: Check for conflicting concurrent commits using optimistic concurrency.

Commit: If no conflict, write a new log file (e.g., 00000000000000000123.json) to _delta_log/.

Checkpoint: Periodically write Parquet checkpoints to speed up reads.

💡Writes use optimistic concurrency: if two writers try to commit overlapping changes, only one succeeds, and the other must retry or abort.
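The validate and commit steps above can be sketched in a few lines of Python. This is a simplified illustration on a local filesystem, not the actual Delta implementation: it assumes exclusive file creation (O_EXCL) as a stand-in for the put-if-absent primitive that a real object store or log store would provide, it skips the conflict re-validation a real writer performs before retrying, and the names try_commit, commit_with_retry, and CommitConflict are hypothetical.

```python
import json
import os
import tempfile


class CommitConflict(Exception):
    """Raised when another writer already created the target version."""


def try_commit(log_dir: str, version: int, actions: list) -> None:
    """Create the commit file for `version`, failing if it already exists."""
    path = os.path.join(log_dir, f"{version:020d}.json")
    try:
        # O_CREAT | O_EXCL: creation fails if the file is already there,
        # so at most one writer can claim a given version.
        fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL)
    except FileExistsError:
        raise CommitConflict(f"version {version} already committed")
    with os.fdopen(fd, "w") as f:
        for action in actions:
            f.write(json.dumps(action) + "\n")  # one action per line


def latest_version(log_dir: str) -> int:
    """Highest committed version in the log directory, or -1 if empty."""
    versions = [
        int(name[:20])
        for name in os.listdir(log_dir)
        if name.endswith(".json") and name[:20].isdigit()
    ]
    return max(versions, default=-1)


def commit_with_retry(log_dir: str, actions: list, max_attempts: int = 3) -> int:
    """Try to commit as the next version, retrying if a writer got there first."""
    for _ in range(max_attempts):
        version = latest_version(log_dir) + 1
        try:
            try_commit(log_dir, version, actions)
            return version
        except CommitConflict:
            # A real writer would re-read the winning commit here and check
            # whether its own changes still apply before retrying.
            continue
    raise CommitConflict("gave up after retries")


# Usage: two commits land as versions 0 and 1 in a temporary log directory.
log_dir = tempfile.mkdtemp()
v0 = commit_with_retry(log_dir, [{"add": {"path": "a.parquet"}}])
v1 = commit_with_retry(log_dir, [{"add": {"path": "b.parquet"}}])
```

The important property is that claiming a version number is a single all-or-nothing operation. Everything before it (planning, writing Parquet files) can happen in parallel without coordination.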

Read Flow

Load: Load the latest checkpoint (if available) and all subsequent JSON logs.

Reconstruct: Reconstruct the current table state (active files, schema, metadata).

Plan: Apply query filters and partition pruning based on metadata.

Read: Read the relevant Parquet files from storage.

Return: Return the final filtered and projected result to the user.

💡Snapshot isolation: Readers see a consistent snapshot, even as new writes occur.
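The read flow can be illustrated with a small Python sketch that replays commits in version order and then uses the per-file stats to skip files. It works on in-memory strings rather than real log files, ignores checkpoints, and only handles the "add", "remove", and "metaData" actions; the function names replay and prune and the sample file names are assumptions made for this example.

```python
import json


def replay(commits):
    """Rebuild table state from commit bodies ordered by version.

    Each body is newline-delimited JSON, one action per line.
    Returns (active_files, metadata), where active_files maps path -> add action.
    """
    active, metadata = {}, None
    for body in commits:
        for line in body.splitlines():
            if not line.strip():
                continue
            action = json.loads(line)
            if "add" in action:
                active[action["add"]["path"]] = action["add"]
            elif "remove" in action:
                active.pop(action["remove"]["path"], None)
            elif "metaData" in action:
                metadata = action["metaData"]
    return active, metadata


def prune(active, column, value):
    """Keep only files whose min/max stats could contain `column == value`."""
    keep = []
    for path, add in active.items():
        stats = json.loads(add.get("stats") or "{}")
        lo = stats.get("minValues", {}).get(column)
        hi = stats.get("maxValues", {}).get(column)
        if lo is not None and hi is not None and not (lo <= value <= hi):
            continue  # stats prove the value cannot be in this file
        keep.append(path)
    return keep


def add_action(path, lo, hi):
    """Build an add action whose stats field is a JSON string, as in the log."""
    stats = json.dumps({"minValues": {"id": lo}, "maxValues": {"id": hi}})
    return json.dumps({"add": {"path": path, "stats": stats}})


# Three hypothetical commits: create table, append, then rewrite f1 as f3.
commits = [
    json.dumps({"metaData": {"partitionColumns": []}}) + "\n"
    + add_action("f1.parquet", 1, 100),
    add_action("f2.parquet", 101, 200),
    json.dumps({"remove": {"path": "f1.parquet"}}) + "\n"
    + add_action("f3.parquet", 1, 100),
]

latest_files, table_meta = replay(commits)   # state as of version 2
older_files, _ = replay(commits[:2])         # state as of version 1
candidates = prune(latest_files, "id", 150)  # files that may hold id = 150
```

A reader that started at version 1 keeps working with older_files even after version 2 is committed, which is the snapshot isolation described above. Replaying a prefix of the log is also the basic idea behind reading an older version of the table.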

References

Delta Lake: High-Performance ACID Table Storage over Cloud Object Stores: https://www.vldb.org/pvldb/vol13/p3411-armbrust.pdf

Delta Lake Docs: https://docs.delta.io/latest/

Lakehouse: A New Generation of Open Platforms that Unify Data Warehousing and Advanced Analytics: https://www.cidrdb.org/cidr2021/papers/cidr2021_paper17.pdf

I hope this article helped you understand how the Delta transaction log works. Please share your feedback and suggestions.

Stay tuned for deep dives into Z-Ordering, Liquid Clustering, and advanced concurrency control in future posts.
