Understanding Text Corpus using Word2Vec (Skip Gram Model) - Tutorial

Understanding a text corpus is really hard for a computer. Older learning approaches treat each word in isolation: they learn the words themselves but not how a word relates to the other words around it.

Recent papers introduced techniques such as Word2Vec, which let a computer do many more things with text, for example finding relationships among words based on the context in which they are used.

As its name suggests, Word2Vec converts a word to a vector. It comes in two types, the Skip Gram Model and the Continuous Bag of Words (CBOW). The difference between the two is simple: the former predicts the neighbors around a particular word, while the latter predicts the word from its neighbors. In this tutorial we will build a skip gram model, which works well for small training datasets.
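To make the difference concrete, here is a minimal sketch in plain Python that builds the training pairs each model would see. The helper names `skip_gram_pairs` and `cbow_pairs` and the `window` parameter are made up for illustration; they are not part of the tutorial's code.

```python
def skip_gram_pairs(tokens, window=1):
    """Return (center, neighbor) pairs: the center word predicts each neighbor."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_pairs(tokens, window=1):
    """Return (neighbors, center) pairs: the neighbors together predict the center."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        pairs.append((context, center))
    return pairs


tokens = "a term of".split()
print(skip_gram_pairs(tokens))  # one pair per (word, neighbor)
print(cbow_pairs(tokens))       # one pair per word, with all its neighbors
```

Skip gram produces one training example per neighbor, while CBOW produces one example per word.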

This type of learning is done using a technique called unsupervised learning, where the dataset has no labels. Because this dataset contains a huge number of words, labelling it by hand would be difficult, so we derive the labels from the text itself: the words surrounding each word serve as its labels. The technique used here is similar to logistic regression, whose purpose in this setting is to differentiate between the actual data and noisy samples.
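The logistic regression idea can be sketched as follows. This is only an illustration under assumptions: the function `pair_loss` and the toy vectors are hypothetical, and the full code for this tutorial may use a different loss. The sketch scores a real (word, context) pair against noise words and is penalized when it cannot tell them apart.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def pair_loss(center_vec, true_context_vec, noise_vecs):
    """Logistic loss with label 1 for the real context word and 0 for each noise word."""
    loss = -math.log(sigmoid(dot(center_vec, true_context_vec)))
    for noise_vec in noise_vecs:
        loss -= math.log(1.0 - sigmoid(dot(center_vec, noise_vec)))
    return loss


# Toy 2-dimensional vectors (made up for illustration).
center = [1.0, 0.5]
real_context = [0.9, 0.4]   # points the same way as the center word
noise = [-1.0, -0.2]        # points the opposite way

good = pair_loss(center, real_context, [noise])
bad = pair_loss(center, noise, [real_context])
print(good < bad)  # the loss is lower when the real pair scores higher than the noise
```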

Let's now work through this in TensorFlow. The following code downloads the file from http://mattmahoney.net/dc/; the downloaded file is a zip file containing an English text corpus.

    # Imports used by the code in this tutorial.
    import collections
    import os
    import random
    import zipfile
    from urllib.request import urlretrieve

    import numpy as np
    import tensorflow as tf

    url = 'http://mattmahoney.net/dc/'

    def maybe_download(filename, expected_bytes):
        """Download a file if not present, and make sure it's the right size."""
        if not os.path.exists(filename):
            filename, _ = urlretrieve(url + filename, filename)
        statinfo = os.stat(filename)
        if statinfo.st_size == expected_bytes:
            print('Found and verified %s' % filename)
        else:
            print(statinfo.st_size)
            raise Exception(
                'Failed to verify ' + filename + '. Can you get to it with a browser?')
        return filename

    filename = maybe_download('text8.zip', 31344016)

To perform our tasks, we need to load all the text into memory as a list of words.

    def read_data(filename):
        """Extract the first file enclosed in a zip file as a list of words"""
        with zipfile.ZipFile(filename) as f:
            data = tf.compat.as_str(f.read(f.namelist()[0])).split()
        return data

    words = read_data(filename)
    print('Data size %d' % len(words))

The next part is to create a dictionary of the most commonly used words. We will build a dictionary of 50000 words, ordered by number of occurrences in descending order.

    vocabulary_size = 50000

    def build_dataset(words):
        count = [['UNK', -1]]
        count.extend(collections.Counter(words).most_common(vocabulary_size - 1))
        dictionary = dict()
        for word, _ in count:
            dictionary[word] = len(dictionary)
        data = list()
        unk_count = 0
        for word in words:
            if word in dictionary:
                index = dictionary[word]
            else:
                index = 0  # dictionary['UNK']
                unk_count = unk_count + 1
            data.append(index)
        count[0][1] = unk_count
        reverse_dictionary = dict(zip(dictionary.values(), dictionary.keys()))
        return data, count, dictionary, reverse_dictionary

    data, count, dictionary, reverse_dictionary = build_dataset(words)
    print('Most common words (+UNK)', count[:5])
    print('Sample data', data[:10])
    del words  # Hint to reduce memory.

Following is some sample data from the dictionary. Since the corpus contains many words, we use UNK (unknown) to represent all the rarely used words.

| Index | Word | No. of Occurrences |
| --- | --- | --- |
| 0 | UNK | 418391 |
| 1 | the | 1061396 |
| 2 | of | 593677 |
| 3 | and | 416629 |
| 4 | one | 411764 |

One thing you need to know: to get the rank (index) of a word, use dictionary; to get the word for an index, use reverse_dictionary.
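The two lookups can be tried on a toy word list. This is a small sketch that mirrors the idea of build_dataset; the helper `build_vocab` and the `toy_` names are made up for illustration.

```python
import collections


def build_vocab(words, vocabulary_size):
    """Map the most common words to ranks; index 0 is reserved for UNK."""
    count = [("UNK", -1)]
    count.extend(collections.Counter(words).most_common(vocabulary_size - 1))
    dictionary = {word: index for index, (word, _) in enumerate(count)}
    reverse_dictionary = {index: word for word, index in dictionary.items()}
    return dictionary, reverse_dictionary


toy_words = "the cat and the dog and the bird".split()
toy_dictionary, toy_reverse = build_vocab(toy_words, vocabulary_size=3)

# Word -> index, with rare words falling back to 0 (UNK).
encoded = [toy_dictionary.get(word, 0) for word in toy_words]
# Index -> word.
decoded = [toy_reverse[index] for index in encoded]

print(encoded)
print(decoded)
```

With a vocabulary of 3, only the and and keep their own index; cat, dog and bird all come back as UNK.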

The next part, the generate_batch method, is the main part. It builds the training examples in which a particular word predicts its context. We place a window around each word, and its size depends on how many words we want to predict from a single word.

Take a subset of the original corpus:

Anarchism originated as a term of abuse first used against early working class radicals including the diggers of the English revolution

With batch_size=8, num_skips=1 and skip_window=1, we get:

Originated as a term of abuse first used

Now we will create a window that moves across the text. Technically, the buffer adds a word on one side and drops one from the other, since it is a deque.

The span is calculated from skip_window as 2 * skip_window + 1; in our case it is equal to 3. This is the size of the moving window. After some iterations we will have the following sample.

| Index | Word |
| --- | --- |
| 0 | of |
| 1 | abuse |
| 2 | first |

Now abuse will predict labels depending on num_skips. Since it is 1, it will predict only one label, which will be either index 0 or index 2, not 1, because a word cannot predict itself.

If you set num_skips to 2, it will predict both 0 and 2; the code avoids picking the same position twice.
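The moving window described above can be sketched on its own before reading the full batch generator. This is an illustration on the sample words only, with made-up variable names; it lists every window instead of sampling labels at random.

```python
import collections

skip_window = 1
span = 2 * skip_window + 1  # [ skip_window, center, skip_window ]
tokens = "originated as a term of abuse first used".split()

# A deque with maxlen drops the oldest word whenever a new one is appended.
buffer = collections.deque(maxlen=span)
examples = []
for token in tokens:
    buffer.append(token)
    if len(buffer) == span:
        window = list(buffer)
        center = window[skip_window]
        context = [w for k, w in enumerate(window) if k != skip_window]
        examples.append((window, center, context))

for window, center, context in examples:
    print(window, "->", center, "predicts one of", context)
```

One of the windows is of, abuse, first, where abuse sits in the center and of and first are the candidate labels.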

    data_index = 0

    def generate_batch(batch_size, num_skips, skip_window):
        global data_index
        assert batch_size % num_skips == 0
        assert num_skips <= 2 * skip_window
        batch = np.ndarray(shape=(batch_size), dtype=np.int32)
        labels = np.ndarray(shape=(batch_size, 1), dtype=np.int32)
        span = 2 * skip_window + 1  # [ skip_window target skip_window ]
        buffer = collections.deque(maxlen=span)
        for _ in range(span):
            buffer.append(data[data_index])
            data_index = (data_index + 1) % len(data)
        for i in range(batch_size // num_skips):
            target = skip_window  # target label at the center of the buffer
            targets_to_avoid = [skip_window]
            for j in range(num_skips):
                while target in targets_to_avoid:
                    target = random.randint(0, span - 1)
                targets_to_avoid.append(target)
                batch[i * num_skips + j] = buffer[skip_window]
                labels[i * num_skips + j, 0] = buffer[target]
            buffer.append(data[data_index])
            data_index = (data_index + 1) % len(data)
        return batch, labels

    print('data:', [reverse_dictionary[di] for di in data[:8]])

    for num_skips, skip_window in [(2, 1), (4, 2)]:
        data_index = 0
        batch, labels = generate_batch(batch_size=8, num_skips=num_skips, skip_window=skip_window)
        print('\nwith num_skips = %d and skip_window = %d:' % (num_skips, skip_window))
        print(' batch:', [reverse_dictionary[bi] for bi in batch])
        print(' labels:', [reverse_dictionary[li] for li in labels.reshape(8)])

Now it's time to train the model on the text corpus and predict words from the dictionary. I am not going to share the training code here since it is similar to the previous ones, but you can get the full code from GitHub.

We will use a batch size of 128 and try to predict the context of each word. This process repeats for the remaining batches for the given number of iterations.

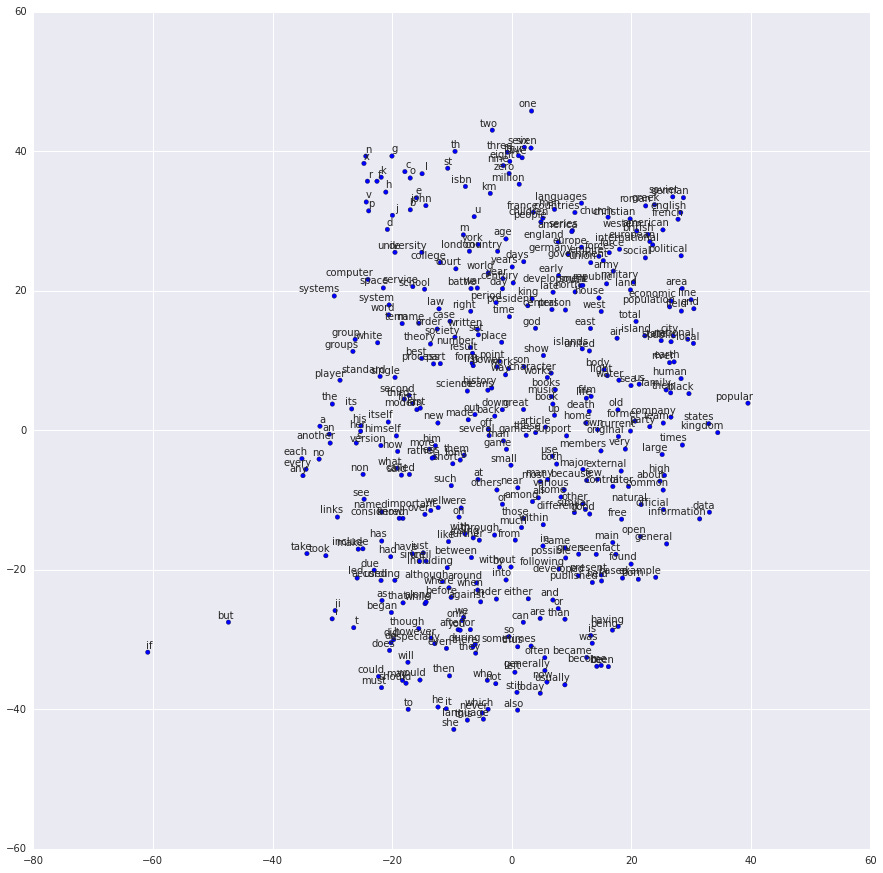

You will also see how the loss and the nearest words change over time.

The nearest words calculation might confuse you a little. The idea behind it is that when the system learns the words around a particular word, it learns the context in which they are used. The vectors become more meaningful after training on a big corpus using thousands of windows.

In the similarity vector, the larger the value, the more related that word is to the particular word. For example, the nearest words to its are their, ours, yours, etc.

Both its and their are close to each other because they are used in similar contexts. The values assigned to its and their are 1.00004 and 0.7417, respectively.

These values mean that its is nearest to itself, which we ignore, so the nearest word is their since it has the highest value among the rest.

You may notice one thing in the code: we multiply the similarity values by minus one. The reason is that sorting works in ascending order, so negating the values puts the most similar words first.
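The nearest words lookup can be sketched with NumPy as follows. This assumes cosine similarity between embedding rows; the function `nearest_words` and the toy embedding values are made up for illustration and are not trained vectors.

```python
import numpy as np


def nearest_words(embeddings, reverse_dictionary, word_index, top_k=2):
    """Return the top_k words most similar to the word at word_index."""
    # Normalize each row to unit length so a dot product is a cosine similarity.
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    normalized = embeddings / norms
    sim = normalized @ normalized[word_index]
    # argsort is ascending, so negate to put the largest similarity first.
    # Position 0 is the word itself, so skip it.
    nearest = (-sim).argsort()[1:top_k + 1]
    return [reverse_dictionary[int(i)] for i in nearest]


# Toy 2-dimensional embeddings (hypothetical values).
toy_embeddings = np.array([
    [1.0, 0.0],  # its
    [0.9, 0.1],  # their
    [0.0, 1.0],  # anarchism
    [0.7, 0.7],  # ours
])
toy_reverse_dictionary = {0: "its", 1: "their", 2: "anarchism", 3: "ours"}

print(nearest_words(toy_embeddings, toy_reverse_dictionary, word_index=0))
```

Without the negation, argsort would list the least similar words first.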

Download the full code from here.

After running this code, you will see the following graph showing the relation among the words.

In case of any difficulties, I recommend doing a dry run. Also watch the video below, which is taken from a Stanford Deep Learning course. It will make several things clear apart from word2vec.

In the next tutorial, I will share the Continuous Bag of Words TensorFlow implementation and show how the difference between these two techniques impacts the outcome.