Sequence to Sequence model for NLP

The Sequence to Sequence model is one of the most effective models for Natural Language Processing tasks such as translation. There are few quality papers available on this topic, and today I will summarize a very effective one titled “Sequence to Sequence Learning with Neural Networks”, written by Ilya Sutskever, Oriol Vinyals, and Quoc V. Le at Google.

If you are interested in reading the whole paper then it can be found here. However, the details I am going to share will be enough to get the general idea of the paper.

The Sequence to Sequence model is an effective way of using a Recurrent Neural Network architecture, specifically the Long Short-Term Memory (LSTM). The main goal of the paper is to improve the quality of English to French translation.

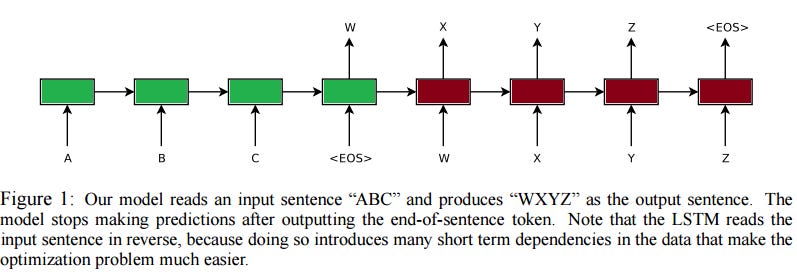

The paper uses two different LSTMs, one for the input sequence and one for the output sequence. The first LSTM converts a variable-length sentence into a fixed-size vector representation, and the second generates the output sentence from that vector. Sentences that are similar in meaning end up close to each other in the vector representation.

To mark the end of a sentence, each sequence is terminated with an <EOS> token. Each LSTM has four layers, making it a deep network; the paper reports that deep LSTMs performed significantly better than shallow ones.

This image is taken from the paper

The purpose of the LSTM is to estimate the conditional probability of the output sequence given the input sequence. The first LSTM computes the vector representation of the input, which is then used as the initial hidden state of the second LSTM to calculate that conditional probability.
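Written out, the conditional probability described above factorizes over the output tokens. In the notation below, $x_1, \dots, x_T$ is the input sequence, $y_1, \dots, y_{T'}$ is the output sequence (whose length $T'$ may differ from $T$), and $v$ is the fixed-size vector produced by the first LSTM:

```latex
p(y_1, \dots, y_{T'} \mid x_1, \dots, x_T)
  = \prod_{t=1}^{T'} p(y_t \mid v, y_1, \dots, y_{t-1})
```

Each factor on the right is a softmax over the output vocabulary, so the second LSTM only ever has to predict the next word given the input summary and the words produced so far.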

One of the most important ideas in this paper is reversing the order of the words in the input sentence to increase the performance of the model. Reversing also makes it work better on long sentences. It introduces many short-term dependencies between the source and the target, which makes the optimization problem easier for Stochastic Gradient Descent. Only the source sentences are reversed, not the target sentences, and since the model is trained on reversed inputs, sentences are fed in reversed at test time as well. For example, if we want to input the sentence I am good and get its French translation, then according to this paper we would input good am I <EOS>.
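As a small sketch of this preprocessing step: the paper reverses the order of the words in the source sentence (not the characters) and leaves the target untouched. The function names, the whitespace tokenization, and the French sentence below are my own illustrative assumptions, not something taken from the paper.

```python
EOS = "<EOS>"

def reverse_source(sentence):
    """Split on whitespace (an assumed, simplistic tokenizer) and reverse the word order."""
    return sentence.split()[::-1]

def make_training_pair(source, target):
    """Reverse only the source; the target keeps its natural order."""
    src_tokens = reverse_source(source) + [EOS]
    tgt_tokens = target.split() + [EOS]
    return src_tokens, tgt_tokens

# Illustrative sentence pair (hypothetical data).
src, tgt = make_training_pair("I am good", "je vais bien")
print(src)  # ['good', 'am', 'I', '<EOS>']
print(tgt)  # ['je', 'vais', 'bien', '<EOS>']
```

Note that the first source word, I, now sits right next to the start of the target sentence, which is the whole point of the trick.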

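Sequence to Sequence can in principle work in two ways, predicting one character at a time or one word at a time; the paper works at the word level. A plain RNN can map inputs to outputs easily only when the alignment between them is known ahead of time, whereas this approach handles input and output sequences of different lengths without a known alignment.

The three main points to note from this paper are:

Two different LSTMs, one for the input and one for the output.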

Deep LSTM with four layers.

Reversing the order of the input sentence.

At first, reversing the order might not seem to help, because the average distance between corresponding source and target words is unchanged. However, the first few words of the source sentence are now very close to the first few words of the translation, so the minimal time lag is greatly reduced, and through this it is easier for SGD to establish communication between the inputs and outputs.

A single input can be mapped to many possible outputs, and to find the most likely one the authors use a search mechanism known as Beam Search. It decodes from left to right and, at each step, keeps only a small number of the most probable partial translations; that number is the beam size. According to the authors, the model performs well even with a beam size of 1, and a beam size of 2 provides most of the benefit of beam search.
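To make the search concrete, here is a minimal sketch of left-to-right beam search in Python. It is not the authors' implementation: `toy_model` and its hand-written probability table are hypothetical stand-ins for the decoder LSTM, chosen so that a beam of size 1 (greedy search) misses the best output while a beam of size 2 finds it.

```python
import math

EOS = "<EOS>"

def beam_search(next_log_probs, beam_size, max_len):
    """Return the (tokens, log_prob) hypothesis with the highest score.

    next_log_probs(prefix) must return a dict mapping each candidate next
    token to its log-probability given the prefix (a tuple of tokens).
    """
    beams = [((), 0.0)]   # partial hypotheses still being extended
    finished = []         # hypotheses that have produced <EOS>
    for _ in range(max_len):
        candidates = []
        for prefix, score in beams:
            for token, log_p in next_log_probs(prefix).items():
                candidates.append((prefix + (token,), score + log_p))
        # Keep only the beam_size most probable extensions.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_size]:
            if prefix[-1] == EOS:
                finished.append((prefix, score))  # complete: leaves the beam
            else:
                beams.append((prefix, score))
        if not beams:
            break
    finished.extend(beams)  # fall back to unfinished hypotheses at max_len
    return max(finished, key=lambda c: c[1])

# Hypothetical next-token probabilities standing in for the decoder.
TABLE = {
    (): {"a": 0.6, "b": 0.4},
    ("a",): {"c": 0.5, "d": 0.5},
    ("b",): {EOS: 0.9, "c": 0.1},
}

def toy_model(prefix):
    probs = TABLE.get(prefix, {EOS: 1.0})
    return {token: math.log(p) for token, p in probs.items()}

greedy = beam_search(toy_model, beam_size=1, max_len=5)
wider = beam_search(toy_model, beam_size=2, max_len=5)
print(greedy[0], round(math.exp(greedy[1]), 2))  # ('a', 'c', '<EOS>') 0.3
print(wider[0], round(math.exp(wider[1]), 2))    # ('b', '<EOS>') 0.36
```

With a beam of 1 the search commits to "a" because it looks best locally, and ends with probability 0.3. With a beam of 2 the hypothesis starting with "b" survives long enough to win with probability 0.36.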

Some of the training details are as follows:

2 LSTMs with 4 layers each, 1000 cells per layer, and 1000-dimensional word embeddings

160,000 words in input vocabulary

80,000 words in output vocabulary
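These numbers are enough for a back-of-the-envelope estimate of the model size. The sketch below counts weights only and ignores biases; splitting the total into recurrent weights, embeddings, and an output softmax layer is my own assumption about how the parameters are laid out. The result agrees with the 384M parameters (64M of them in the recurrent connections) that the paper reports.

```python
LAYERS = 4
CELLS = 1000
EMBED_DIM = 1000
SRC_VOCAB = 160_000
TGT_VOCAB = 80_000

def lstm_layer_weights(input_dim, hidden_dim):
    """Four gate blocks, each reading the layer input and the previous hidden state (biases ignored)."""
    return 4 * hidden_dim * (input_dim + hidden_dim)

# The first layer reads word embeddings; deeper layers read the layer below.
per_lstm = sum(
    lstm_layer_weights(EMBED_DIM if layer == 0 else CELLS, CELLS)
    for layer in range(LAYERS)
)
recurrent = 2 * per_lstm                      # input LSTM + output LSTM
embeddings = (SRC_VOCAB + TGT_VOCAB) * EMBED_DIM
softmax = CELLS * TGT_VOCAB                   # projection onto the output vocabulary
total = recurrent + embeddings + softmax

print(f"{per_lstm:,}")   # 32,000,000
print(f"{recurrent:,}")  # 64,000,000
print(f"{total:,}")      # 384,000,000
```

Most of the parameters sit in the embeddings and the output softmax rather than in the LSTMs themselves, which is a direct consequence of the large vocabularies.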

In order to carry out the task efficiently, they implemented the LSTM in C++ and trained it on a machine with 8 GPUs.

The experimental results section shows how well these techniques work, even for long sentences. The BLEU (bilingual evaluation understudy) scores with different settings can be compared in the following table.

The visual representation can be seen in the paper.
