Recurrent Neural Networks (LSTM) Tutorial

Recurrent Neural Networks (RNNs) are one of the most widely used artificial neural network (ANN) structures for text and speech learning problems. RNNs are designed to work well when the input is a sequence whose length varies; speech and text are examples of such input.

A simple feedforward neural network depends only on the current input and is independent of the past. RNNs are designed for problems where we need to know the past in order to predict the future, such as text.
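Here is what "knowing the past" means concretely. The following is a minimal NumPy sketch of a plain (vanilla) RNN, not code from this tutorial. A hidden state h carries information forward from earlier inputs and is updated at every step as h_t = tanh(x_t W_x + h_{t-1} W_h + b). The sizes and random weights are arbitrary assumptions chosen only for illustration.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a vanilla RNN over a sequence and return every hidden state.

    inputs: array of shape (seq_len, input_size), one row per time step.
    """
    h = np.zeros(W_h.shape[0])  # the hidden state starts empty
    states = []
    for x_t in inputs:
        # The new state depends on the current input AND the previous state.
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
input_size, hidden_size = 27, 16  # illustrative sizes only
W_x = rng.normal(scale=0.1, size=(input_size, hidden_size))
W_h = rng.normal(scale=0.1, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

sequence = np.eye(input_size)[[8, 0, 12, 0, 1]]  # five one-hot vectors (arbitrary IDs)
states = rnn_forward(sequence, W_x, W_h, b)
print(states.shape)  # (5, 16)
```

The same function accepts a sequence of any length. This is the property that makes RNNs a natural fit for text and speech.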

In the previous word2vec tutorial, we listed the nearest words using two techniques: skip-gram and continuous bag of words (CBOW). With an RNN, we can instead predict what the next word or letter will be.

One problem remains with RNNs, however: they are unable to remember the earlier words that would help predict future words.

Let's clarify with an example. Suppose we need to predict the last word of the following text:

The birds are in the sky

Here the RNN would work well, because the immediately preceding words are enough to predict the last word; in other words, we have enough nearby context. But what happens with the following text?

I was born in China……… I speak fluent Chinese.

The RNN would likely fail to predict the last word because of its limited ability to remember context. To predict "Chinese", it needs to know the country name "China". That name appeared at the start of the text and has been forgotten by the time the RNN reaches the end.

Technically, this problem arises during backpropagation: the gradient either explodes or vanishes. The former is handled with a technique called gradient clipping, while the latter calls for the LSTM.
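Here is a rough way to see why this happens. Backpropagating through T time steps multiplies the gradient by roughly the same recurrent factor T times. The sketch below uses a single scalar w as a stand-in for the recurrent weight matrix and ignores the activation's derivative; both are simplifying assumptions for illustration only.

```python
def gradient_after(steps, w, grad=1.0):
    """Scale a gradient by the recurrent factor w once per time step (scalar toy model)."""
    for _ in range(steps):
        grad *= w
    return grad

for w in (0.5, 1.0, 1.5):
    print(w, gradient_after(50, w))
```

After 50 steps, a factor of 0.5 shrinks the gradient to about 9e-16 (it vanishes), while a factor of 1.5 grows it to about 6e8 (it explodes). Only a factor of exactly 1.0 keeps it stable.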

Long short-term memory (LSTM) networks are based on the RNN architecture, but a modification to the recurrent cell makes them work well in scenarios like the one above.

An LSTM solves this issue by remembering only the information that helps predict future words: gates inside the cell learn what to keep in memory and what to forget.
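For reference, here are the standard LSTM cell equations, written to match the lstm_cell function later in this tutorial. In them, x_t is the current input, h_{t-1} is the previous output, c_{t-1} is the previous cell state and \sigma is the sigmoid. The weight subscripts mirror the variable names ix, im, ib, and so on in that code.

```latex
\begin{aligned}
i_t &= \sigma(x_t W_{ix} + h_{t-1} W_{im} + b_i) \\
f_t &= \sigma(x_t W_{fx} + h_{t-1} W_{fm} + b_f) \\
g_t &= \tanh(x_t W_{cx} + h_{t-1} W_{cm} + b_c) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
o_t &= \sigma(x_t W_{ox} + h_{t-1} W_{om} + b_o) \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
```

The forget gate f_t controls how much of the old cell state is kept. Because c_t is updated additively rather than being squashed through a nonlinearity at every step, information such as "China" can survive many time steps.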

Let's see how a single LSTM cell is implemented in TensorFlow. The code is more involved than in the previous tutorials. This model predicts text character by character.

The first steps are the same as in the previous word2vec tutorial: downloading the text corpus and reading it into memory.
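Here is a reminder of what that step involves. This minimal sketch assumes the corpus is a zip archive whose first member is a plain-text file. The helper name read_data and the path corpus.zip are hypothetical placeholders, not names taken from this tutorial's code.

```python
import zipfile

def read_data(filename):
    """Hypothetical helper: return the first file inside a zip archive as one string."""
    with zipfile.ZipFile(filename) as f:
        name = f.namelist()[0]
        return f.read(name).decode('utf-8')

# text = read_data('corpus.zip')  # 'corpus.zip' is a placeholder path
```

The later code expects `text` to contain only lowercase letters and spaces. Any other character is reported as unexpected and mapped to the space ID.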

Next, we set the validation set size, split the text into validation and training parts, and print a sample of each:

valid_size = 1000
valid_text = text[:valid_size]
train_text = text[valid_size:]
train_size = len(train_text)
print(train_size, train_text[:64])
print(valid_size, valid_text[:64])

Next, we create a vocabulary of 27 symbols: the letters a to z plus the space. Each character is mapped to an ID derived from its ASCII code (the space is 0, and a to z are 1 to 26). The following functions convert a character to its ID and back.

import string

vocabulary_size = len(string.ascii_lowercase) + 1  # [a-z] + ' '
first_letter = ord(string.ascii_lowercase[0])

def char2id(char):
    # Map 'a'..'z' to 1..26 and the space to 0.
    if char in string.ascii_lowercase:
        return ord(char) - first_letter + 1
    elif char == ' ':
        return 0
    else:
        print('Unexpected character: %s' % char)
        return 0

def id2char(dictid):
    # Inverse of char2id: 1..26 back to 'a'..'z', 0 back to the space.
    if dictid > 0:
        return chr(dictid + first_letter - 1)
    else:
        return ' '

print(char2id('a'), char2id('z'), char2id(' '), char2id('ï'))
print(id2char(1), id2char(26), id2char(0))

Here comes the main part. The BatchGenerator class generates batches by iterating over the text corpus. It contains two methods: one generates a single batch, and the other generates the full list of batches returned on each call.

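The _next_batch method generates a single batch from the current cursor positions. Its first call, made in the constructor, produces the initial batch. It helps to follow along with a dry run.

The constructor splits the text into batch_size equal segments and places one cursor at the start of each. As a result, the 64 rows of a batch read 64 different parts of the text in parallel. The batch has shape [64, 27], that is, batch_size by vocabulary_size. For each row, the column matching the character under that row's cursor is set to 1.0. The cursor then moves forward by one character, wrapping around at the end of the text.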

The end result is a 64 x 27 batch containing exactly 64 ones, one per row.

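The next method generates further batches and collects them in a list called batches. The list starts with the last batch of the previous call, followed by num_unrollings (10) new batches, so each call returns 11 batches. Because that last batch is carried over, the final character of one call's sequence is also the first input of the next call.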
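Here is a small-scale version of the dry run suggested above. This is a stripped-down, hypothetical re-implementation of the same cursor logic on a toy text. It returns characters instead of one-hot rows so the output is easy to read. With 2 rows and the text "abcdefgh", the text is split into two segments of 4 characters. The cursors therefore start at positions 0 and 4.

```python
def toy_batches(text, batch_size, num_unrollings):
    """Mimic BatchGenerator's cursor logic, yielding characters instead of one-hot rows."""
    segment = len(text) // batch_size
    cursor = [offset * segment for offset in range(batch_size)]

    def next_batch():
        batch = []
        for b in range(batch_size):
            batch.append(text[cursor[b]])
            cursor[b] = (cursor[b] + 1) % len(text)  # advance and wrap around
        return batch

    last = next_batch()
    while True:
        batches = [last]  # the last batch of the previous call comes first
        for _ in range(num_unrollings):
            batches.append(next_batch())
        last = batches[-1]
        yield batches

gen = toy_batches("abcdefgh", batch_size=2, num_unrollings=2)
print(next(gen))  # [['a', 'e'], ['b', 'f'], ['c', 'g']]
print(next(gen))  # [['c', 'g'], ['d', 'h'], ['e', 'a']]
```

Read down the columns: row 0 sees "abc" and then "cde", while row 1 sees "efg" and then "gha". The repeated batch ('c', 'g') links the two calls, and the modulo wraps row 1's cursor back to the start of the text.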

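import numpy as np

batch_size = 64
num_unrollings = 10

class BatchGenerator(object):
    def __init__(self, text, batch_size, num_unrollings):
        self._text = text
        self._text_size = len(text)
        self._batch_size = batch_size
        self._num_unrollings = num_unrollings
        # Split the text into batch_size segments; each row of a batch reads its own segment.
        segment = self._text_size // batch_size
        self._cursor = [offset * segment for offset in range(batch_size)]
        self._last_batch = self._next_batch()

    def _next_batch(self):
        """Generate a single one-hot batch from the current cursor positions."""
        # np.float was removed in NumPy 1.24; use an explicit float type instead.
        batch = np.zeros(shape=(self._batch_size, vocabulary_size), dtype=np.float32)
        for b in range(self._batch_size):
            batch[b, char2id(self._text[self._cursor[b]])] = 1.0
            self._cursor[b] = (self._cursor[b] + 1) % self._text_size
        return batch

    def next(self):
        """Return the last batch of the previous call followed by num_unrollings new batches."""
        batches = [self._last_batch]
        for step in range(self._num_unrollings):
            batches.append(self._next_batch())
        self._last_batch = batches[-1]
        return batches

The characters function below returns, for each row of a batch, the character with the highest probability. To print the batches, we also need batches2string, which joins those characters into one string per row.

def characters(probabilities):
    """Turn a one-hot encoding or a probability distribution into its most likely characters."""
    return [id2char(c) for c in np.argmax(probabilities, 1)]

def batches2string(batches):
    """Convert a list of batches into their most likely strings, one per row."""
    s = [''] * batches[0].shape[0]
    for b in batches:
        s = [''.join(x) for x in zip(s, characters(b))]
    return s

Now we instantiate the class for the training and validation text and print a few batches: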

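train_batches = BatchGenerator(train_text, batch_size, num_unrollings)
valid_batches = BatchGenerator(valid_text, 1, 1)

print(batches2string(train_batches.next()))
print(batches2string(train_batches.next()))
print(batches2string(valid_batches.next()))
print(batches2string(valid_batches.next()))

The validation generator uses a batch size of 1 and a single unrolling. Each call therefore returns two one-character batches: an input and its label.

The following four helper functions are used in the training part. logprob is used to compute the perplexity on the training minibatches and on the validation set. The other three are used to sample text from the model.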

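def logprob(predictions, labels):
    """Average negative log-probability of the true labels in a predicted batch."""
    # Clamp tiny values (in place) to avoid log(0).
    predictions[predictions < 1e-10] = 1e-10
    return np.sum(np.multiply(labels, -np.log(predictions))) / labels.shape[0]

The following function samples one element from a distribution, assumed to be an array of normalized probabilities. It is called by the sample function below.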

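import random

def sample_distribution(distribution):
    """Sample one index from an array of normalized probabilities."""
    r = random.uniform(0, 1)
    s = 0
    for i in range(len(distribution)):
        s += distribution[i]
        if s >= r:
            return i
    return len(distribution) - 1

The sample function converts a predicted probability vector into a one-hot encoding by sampling one character from it: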

def sample(prediction):
    """Turn a (1, vocabulary_size) row of predictions into a one-hot encoded sample."""
    p = np.zeros(shape=[1, vocabulary_size], dtype=np.float32)
    p[0, sample_distribution(prediction[0])] = 1.0
    return p

The following function generates a random vector of probabilities, which is later sampled and converted into characters.

def random_distribution():
    b = np.random.uniform(0.0, 1.0, size=[1, vocabulary_size])
    return b / np.sum(b, 1)[:, None]
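The tutorial does not show how logprob turns into a perplexity. Under the usual definition, perplexity is the exponential of the average negative log-probability, which is the value logprob returns. The self-contained sketch below re-declares logprob and assumes the 27-character vocabulary. Its version computes the same value as the tutorial's logprob but clamps a copy instead of modifying predictions in place. The sketch checks a useful sanity value: a model that predicts a uniform distribution over 27 characters has a perplexity of 27, while a perfect model reaches 1.

```python
import numpy as np

vocabulary_size = 27  # [a-z] + ' ', as in the tutorial

def logprob(predictions, labels):
    """Average negative log-probability of the true labels."""
    predictions = np.maximum(predictions, 1e-10)  # avoid log(0) without modifying the input
    return np.sum(np.multiply(labels, -np.log(predictions))) / labels.shape[0]

def perplexity(predictions, labels):
    return float(np.exp(logprob(predictions, labels)))

labels = np.eye(vocabulary_size)[[1, 2, 0, 26]]  # four one-hot characters
uniform = np.full((4, vocabulary_size), 1.0 / vocabulary_size)
print(perplexity(uniform, labels))        # about 27 (a blind guess)
print(perplexity(labels.copy(), labels))  # 1.0 (a perfect prediction)
```

A trained character model should therefore report a perplexity well below 27.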

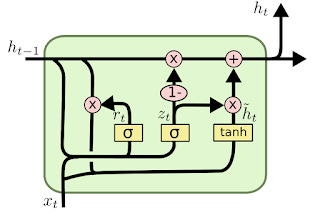

Now comes the main part, the TensorFlow graph. It includes the following function, which implements the LSTM cell and returns its output together with the updated cell state.

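import tensorflow as tf

# ix, im, ib (input gate), fx, fm, fb (forget gate), cx, cm, cb (memory cell)
# and ox, om, ob (output gate) are weight and bias variables defined elsewhere
# in the graph (see the full code).
def lstm_cell(i, o, state):
    """i: current input batch, o: previous output, state: previous cell state."""
    input_gate = tf.sigmoid(tf.matmul(i, ix) + tf.matmul(o, im) + ib)
    forget_gate = tf.sigmoid(tf.matmul(i, fx) + tf.matmul(o, fm) + fb)
    update = tf.matmul(i, cx) + tf.matmul(o, cm) + cb
    # Keep part of the old state (forget gate) and add part of the new candidate (input gate).
    state = forget_gate * state + input_gate * tf.tanh(update)
    output_gate = tf.sigmoid(tf.matmul(i, ox) + tf.matmul(o, om) + ob)
    return output_gate * tf.tanh(state), state

The lstm_cell function implements the LSTM cell shown in the image.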

To learn more about LSTM, see this paper.
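You can see the shapes involved without building a TensorFlow graph. Here is a NumPy re-implementation of the same cell with randomly initialized weights. The number of hidden nodes (64), the initialization range and the dictionary holding the twelve weights are illustrative assumptions, not values taken from the full code.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_cell_np(i, o, state, w):
    """NumPy version of lstm_cell: i is the input batch, o the previous output, state the cell state."""
    input_gate = sigmoid(i @ w['ix'] + o @ w['im'] + w['ib'])
    forget_gate = sigmoid(i @ w['fx'] + o @ w['fm'] + w['fb'])
    update = i @ w['cx'] + o @ w['cm'] + w['cb']
    state = forget_gate * state + input_gate * np.tanh(update)
    output_gate = sigmoid(i @ w['ox'] + o @ w['om'] + w['ob'])
    return output_gate * np.tanh(state), state

vocabulary_size, num_nodes, batch_size = 27, 64, 4  # illustrative sizes
rng = np.random.default_rng(0)
w = {}
for gate in 'ifco':  # input, forget, cell update, output
    w[gate + 'x'] = rng.uniform(-0.1, 0.1, size=(vocabulary_size, num_nodes))  # input weights
    w[gate + 'm'] = rng.uniform(-0.1, 0.1, size=(num_nodes, num_nodes))        # recurrent weights
    w[gate + 'b'] = np.zeros((1, num_nodes))                                   # biases

i = np.eye(vocabulary_size)[[1, 2, 3, 4]]  # a batch of 4 one-hot characters
o = np.zeros((batch_size, num_nodes))      # previous output
state = np.zeros((batch_size, num_nodes))  # previous cell state
output, state = lstm_cell_np(i, o, state, w)
print(output.shape, state.shape)  # (4, 64) (4, 64)
```

Every gate is a (batch_size, num_nodes) matrix of values between 0 and 1. The gates act element-wise on the cell state, which is why the cell state and the output share the same shape.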

The rest of the code is nothing new: it defines the placeholders, the optimizer and the gradients. As discussed above, exploding gradients call for gradient clipping, so you will see the following statement in the code:

# Rescale all gradients together so that their combined norm is at most 1.25.
gradients, _ = tf.clip_by_global_norm(gradients, 1.25)

The training part is also similar to the previous tutorials. It feeds the batches into the graph, computes the loss, and uses the functions above to report the perplexity on a minibatch and on the validation set.
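The clipping statement tf.clip_by_global_norm(gradients, 1.25) rescales a whole list of gradient tensors together. If their combined L2 norm exceeds the threshold, every tensor is multiplied by threshold / global_norm, which preserves the direction of the update. Below is a minimal NumPy sketch of that rule. It is a re-implementation for illustration, not TensorFlow's own code.

```python
import numpy as np

def clip_by_global_norm(grads, clip_norm):
    """Return (clipped_grads, global_norm) using t * clip_norm / max(global_norm, clip_norm)."""
    global_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    scale = clip_norm / max(global_norm, clip_norm)
    return [g * scale for g in grads], global_norm

grads = [np.array([3.0, 0.0]), np.array([[0.0], [4.0]])]  # global norm = 5
clipped, norm = clip_by_global_norm(grads, 1.25)
print(norm)                                           # 5.0
print(np.sqrt(sum(np.sum(g ** 2) for g in clipped)))  # 1.25
```

When the global norm is already below the threshold, the scale is 1 and the gradients pass through unchanged.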

Going through the full code and doing a dry run will make everything clear. The full code is available here.

In the next tutorial, I will show how to apply this technique to two characters at a time (bigrams). I will also introduce a regularization technique for LSTMs known as dropout.