Melbourne House Price Prediction using XGBoost

In the previous article we used XGBoost for a classification problem on the Titanic dataset. In this article we will do regression on the Melbourne Housing dataset using Extreme Gradient Boosting through the caret package in R. We will predict house prices and try to get the best accuracy while avoiding overfitting.

The dataset does not come with descriptions of its variables, but you can easily find them by searching online. Most of the variables are easy to understand.

Download and Load Dataset

The first step is to download the dataset from Kaggle or GitHub. Housing datasets come in a variety of forms, and some have more than 70 variables. This one is quite simple, and the analysis is performed for the sake of learning.

train <- read.csv('Aus_housing.csv', stringsAsFactors =FALSE)

Install and Load Libraries

We will need to install many libraries and then load them into R.

#install and load required packages

install.packages(c("gridExtra","ggplot2","e1071", "caret", "doSNOW", "ipred", "xgboost", "dplyr"))

library(e1071)

library(caret)

library(doSNOW)

library(ipred)

library(xgboost)

library(dplyr)

library(ggplot2)

library(gridExtra)

Clean Dataset

Before we analyze the data, we need to clean the dataset. Cleaning can take a lot of time: we have to remove duplicate records and records with missing values. Viewing the dataset shows that it contains many missing values, which we can remove.

The outcome variable Price contains a lot of missing values, and we can remove those rows. (We could also store them separately and predict their prices once the model is ready.) Similarly, many rows have several attribute values missing; we remove them by dropping the rows where Bedroom2 is missing:

#removing missing records based on Price and Bedroom2

train <- train[!is.na(train$Price),]

train <- train[!is.na(train$Bedroom2),]

You will still see many missing values, for example in BuildingArea, but they are now limited to a few columns, which we will impute later.
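The cleaning paragraph above also mentions removing duplicate records, which the code does not do. It is also useful to check how many values are missing in each column. Here is a minimal base-R sketch of both steps on a small made-up data frame; the toy values are illustrative only, and on the real data you would pass `train` instead of `toy`:

```r
# Made-up data frame standing in for the housing data
toy <- data.frame(
  Price        = c(1200000, NA, 850000, 850000, 990000),
  Bedroom2     = c(3, 2, NA, NA, 4),
  BuildingArea = c(150, NA, NA, NA, 0)
)

# Count the missing values in every column
na.counts <- colSums(is.na(toy))
print(na.counts)

# Drop rows that exactly duplicate an earlier row (NA counts as equal to NA)
deduped <- toy[!duplicated(toy), ]
print(nrow(deduped))
```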

Also, BuildingArea and Landsize contain many zero values. These are actually missing values, so we replace them with NA.

# replacing 0 with NA

train$BuildingArea[train$BuildingArea == 0] <- NA

train$Landsize[train$Landsize == 0] <- NA

Data Analysis

Now comes the data analysis step, also known as EDA (Exploratory Data Analysis), where we explore the data. Ideally we would analyze every variable individually and against the outcome variable, but here I will analyze only the important ones.

First, we plot a histogram of the outcome variable to see how it is distributed.

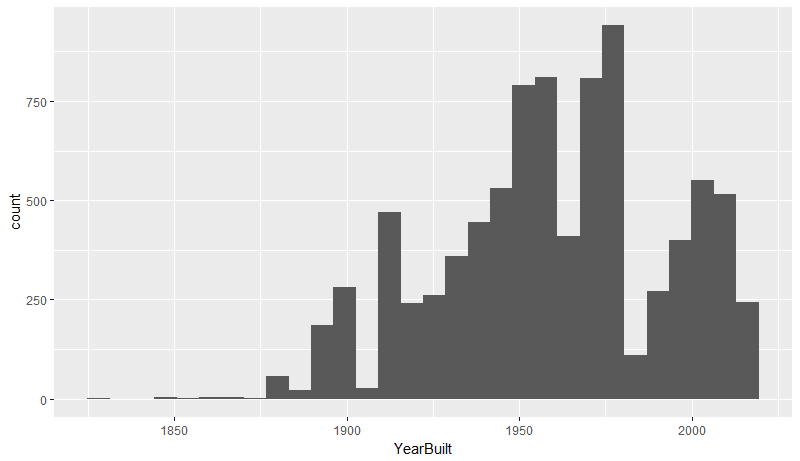

# For plotting continuous individual plots

ggplot(aes(Price),data=train)+geom_histogram()

Similarly, we look at the distribution of YearBuilt. This graph seems to show that the majority of houses were built in the mid 20th century.

ggplot(aes(YearBuilt),data=train)+ geom_histogram()

Now, multiple bar charts to see the distributions of Rooms, Bedroom2, Bathroom and Car.

# Multiple Plots

a <- ggplot(aes(Rooms),data=train)+ geom_bar()

b <- ggplot(aes(Bedroom2),data=subset(train, Bedroom2 < 20))+

geom_bar() + scale_x_continuous(breaks = seq(0, 8, 2)) + coord_cartesian(xlim = c(0, 8))

c <- ggplot(aes(Bathroom),data=train)+ geom_bar()

d <- ggplot(aes(Car),data=train)+ geom_bar()

grid.arrange(a, b, c, d, ncol=2, nrow=2)

Plotting Date vs Price does not give much information: Price seems to be independent of Date. The plot would be more useful if the dates covered a range of at least five years.
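You can check directly how much time the sale dates cover. Here is a minimal sketch. It assumes that `Date` is stored as day/month/year text, as in the `as.Date(Date,'%d/%m/%Y')` call used for the plot. The dates below are made up:

```r
# Made-up sale dates in the same day/month/year format as the Date column
dates <- c("3/12/2016", "4/02/2016", "27/05/2017", "16/09/2017")
parsed <- as.Date(dates, "%d/%m/%Y")

# Earliest and latest sale date
date.range <- range(parsed)
print(date.range)

# Span in years, using 365.25 days per year on average
span.years <- as.numeric(diff(date.range)) / 365.25
print(round(span.years, 2))
```

On the real data, replace `dates` with `train$Date`.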

# Date plot

ggplot(aes(as.Date(Date,'%d/%m/%Y'),Price), data= train)+ geom_point()

Next, we plot Landsize vs Price and BuildingArea vs Price, colored by Type and by Method. This shows how Price changes with these variables.

# Multi plot

w <- ggplot(aes(Landsize,Price), data= train)+

geom_point(alpha=1/3, aes(color= Type))+

coord_cartesian(xlim = c(0, 10000))

x <- ggplot(aes(Landsize,Price), data= train)+

geom_point(alpha=1/3, aes(color= Method))+

coord_cartesian(xlim = c(0, 10000))

y <- ggplot(aes(BuildingArea,Price), data= train)+

geom_point(alpha=1/5, aes(color= Type))+

coord_cartesian(xlim = c(0, 1000), ylim = c(0, 5e+06))

z <- ggplot(aes(BuildingArea,Price), data= train)+

geom_point(alpha=1/5, aes(color= Method))+

coord_cartesian(xlim = c(0, 1000), ylim = c(0, 5e+06))

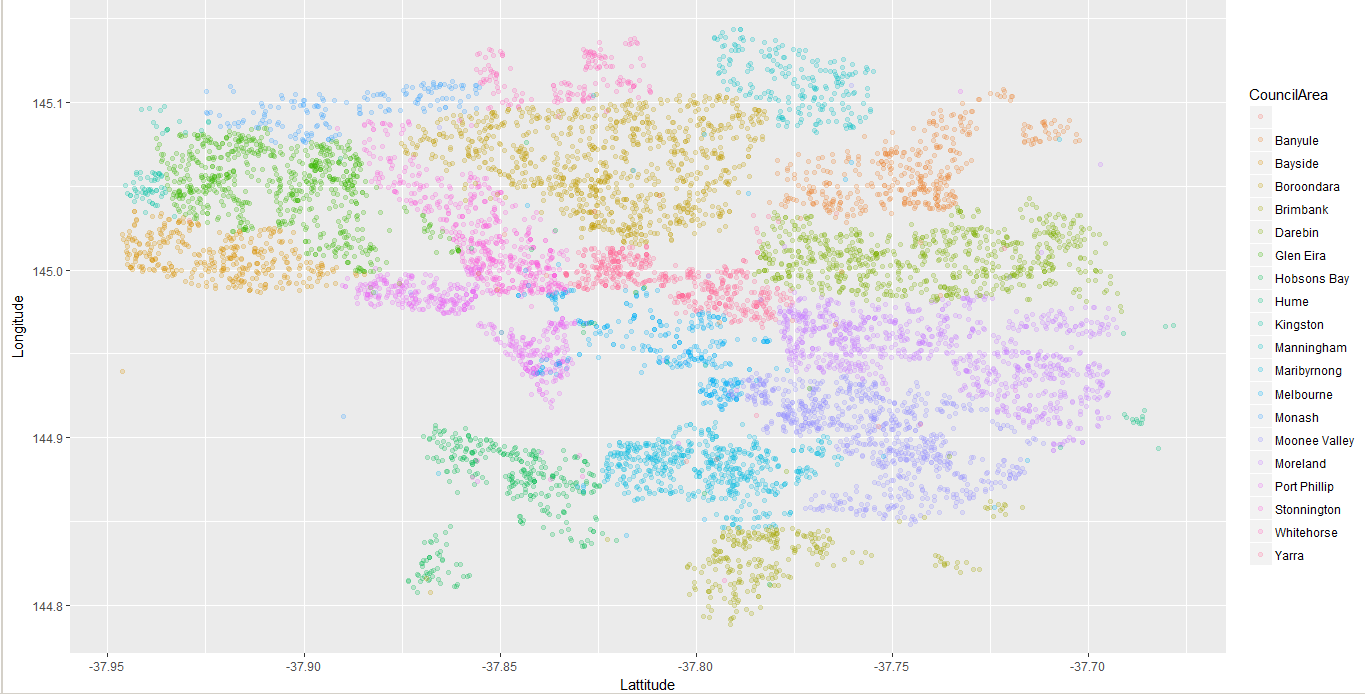

grid.arrange(w, x, y, z, ncol=2, nrow=2)

In the first column, the second plot (`BuildingArea vs Price vs Type`) is far more informative than the first one (Landsize vs Price vs Type). Both BuildingArea and Type look useful for predicting Price. Next, we plot Lattitude against Longitude, colored by CouncilArea, to see how the council areas are spread out on a map.

ggplot(aes(Longitude, Lattitude), data= train)+ geom_point(alpha=1/5, aes(color= CouncilArea))

Similarly, you can create your own visualizations and interpret them in different ways. These visualizations help with feature selection and dimensionality reduction. I recommend digging deeper and doing more EDA.

Feature Selection

Now it's time to reduce the number of features, in other words, the dimensionality. Several features are irrelevant and can be filtered out directly. Since the number of variables is not that high, we then choose a subset of the remaining ones by inspection.

Variables like Suburb, Address and SellerG are categorical with many categories. Including them would not improve the model and would make training slower. Postcode is also left out: although it is stored as a number, it behaves like a categorical variable. (Visualize them for better understanding.)
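To see how many categories each candidate variable has, count the distinct values per column. Here is a minimal sketch on a made-up data frame that reuses some of the dataset's column names. On the real data you would run it on `train` before the feature selection step. Columns with very high counts are the ones that would add many dummy columns:

```r
# Made-up rows that reuse some of the dataset's column names
toy <- data.frame(
  Suburb   = c("Abbotsford", "Abbotsford", "Richmond", "Carlton"),
  SellerG  = c("Biggin", "Nelson", "Biggin", "Jellis"),
  Type     = c("h", "h", "h", "u"),
  Postcode = c(3067, 3067, 3121, 3053),
  stringsAsFactors = FALSE
)

# Number of distinct values in each column
n.levels <- sapply(toy, function(col) length(unique(col)))
print(n.levels)
```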

The purpose of the Distance variable was not clear to me, and there is no meaningful relationship between Distance and Price, so I leave it out. (Dig deeper!)
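A quick numeric check of a relationship like Distance vs Price is the correlation between each numeric variable and Price. Here is a minimal sketch on made-up data, where the column `Noise` is a hypothetical stand-in for a variable unrelated to Price. On the real data you would use columns such as Distance from `train`.

Pearson correlation only measures linear association. A low value therefore does not rule out a non-linear effect that a tree model could still use.

```r
# Made-up data: Price depends on BuildingArea; Noise is unrelated to Price
set.seed(1)
n <- 200
toy <- data.frame(
  BuildingArea = runif(n, 50, 300),
  Noise        = runif(n, 1, 30)
)
toy$Price <- 5000 * toy$BuildingArea + rnorm(n, sd = 50000)

# Pearson correlation of each predictor with Price
predictors <- setdiff(names(toy), "Price")
cors <- sapply(predictors, function(v) cor(toy[[v]], toy$Price))

# Strongest linear relationships first
print(sort(abs(cors), decreasing = TRUE))
```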

Landsize and Date seem like common features for predicting prices, but not in this case: when I tried including them, the model started to overfit. (See the visualizations.)

Based on the visualizations above and some common sense, we select the following features:

# Feature selection

train <- subset(train, select = c(Rooms, Bedroom2, Bathroom, Car, Type,

Method, BuildingArea, YearBuilt, CouncilArea,

Lattitude, Longitude, Price))

Impute Missing Values

To impute missing values we need to create dummy variables. Before that, any categorical variable that has no missing values but many categories can be set aside and added back once the missing values are imputed.
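Dummy (one-hot) encoding turns each level of a categorical variable into its own 0/1 column. This is why a variable with many categories makes the encoded data much wider.

Here is a minimal base-R sketch that uses `model.matrix` on made-up values to show the idea. caret's `dummyVars`, which is used below, likewise creates one indicator column per level by default:

```r
# Made-up categorical data
toy <- data.frame(
  Type        = factor(c("h", "u", "t", "h")),
  CouncilArea = factor(c("Yarra", "Moreland", "Boroondara", "Darebin"))
)

# One 0/1 column per level ("- 1" removes the intercept so no level is dropped)
type.dummies <- model.matrix(~ Type - 1, data = toy)
print(type.dummies)

# Every extra level adds another column
council.dummies <- model.matrix(~ CouncilArea - 1, data = toy)
print(ncol(council.dummies))
```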

In our case that variable is CouncilArea, so we store it in a temporary data frame and then remove it. Leaving it out avoids creating a dummy column for every council area, which makes imputation faster.

# For back up of values

temp = data.frame(train$CouncilArea)

train <- subset(train, select = -c(CouncilArea))

Now we use the caret package to create dummy variables, impute the missing values, and finally copy the imputed columns back into the original dataset.

# Transform the features to dummy variables (train[, -1] leaves out the first column, Rooms).

dummy.vars <- dummyVars(~ ., data = train[, -1])

train.dummy <- predict(dummy.vars, train[, -1])

View(train.dummy)

# Now, impute!

pre.process <- preProcess(train.dummy, method = "bagImpute")

imputed.data <- predict(pre.process, train.dummy)

View(imputed.data)

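# Assign the imputed values to the original dataset
# Select columns by name so the assignment does not depend on the dummy column order

train$BuildingArea <- imputed.data[, "BuildingArea"]

train$YearBuilt <- imputed.data[, "YearBuilt"]

train$Lattitude <- imputed.data[, "Lattitude"]

train$Longitude <- imputed.data[, "Longitude"]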

#Adding back the CouncilArea

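train$CouncilArea <- temp$train.CouncilArea

Split Data and Train Model

The next few steps are straightforward. We split the data into training and test sets, set up cross-validation and a hyperparameter grid, and train the model. The code is similar to what we did with the Titanic dataset.

# Split the data: 70% for training, 30% held out for testing (p sets the training fraction)

set.seed(54321)

indexes <- createDataPartition(train$Price, times = 1, p = 0.7, list = FALSE)

housing.train <- train[indexes, ]

housing.test <- train[-indexes, ]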

# Using 10-fold cross validation repeated 3 times

# Using a grid search for optimal model hyperparameter values.

train.control <- trainControl(method = "repeatedcv",

number = 10,

repeats = 3,

search = "grid")

# Leverage a grid search of hyperparameters for xgboost

tune.grid <- expand.grid(eta = c(0.05, 0.075, 0.1),

nrounds = c(50, 75, 100),

max_depth = 6:8,

min_child_weight = c(2.0, 2.25, 2.5),

colsample_bytree = c(0.3, 0.4, 0.5),

gamma = 0,

subsample = 1)

# For Parallel Processing use doSNOW

# Set the number of worker processes to match the number of cores on your machine

cl <- makeCluster(4, type = "SOCK")

# Register cluster so that caret will know to train in parallel.

registerDoSNOW(cl)

caret.cv <- train(Price ~ .,

data = housing.train,

method = "xgbTree",

tuneGrid = tune.grid,

trControl = train.control)

stopCluster(cl)

# Examine caret's processing results

caret.cv

Printing caret.cv shows how the model performed with each combination of hyperparameters and which combination was selected as the best. For regression, caret chooses the lowest RMSE by default.
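This grid search is fairly expensive, which is why the parallel cluster helps. The sketch below does two things:

* It counts the hyperparameter combinations in `tune.grid` and the resamples set in `trainControl`.
* It illustrates in base R how repeated 10-fold cross-validation assigns rows to folds.

The fold code is a simplified illustration of the idea, not caret's own implementation. Also, caret can reuse one xgboost fit for several `nrounds` values, so the number of models actually trained may be lower than the count shown.

```r
# Number of values tried for each hyperparameter in tune.grid
n.values <- c(eta = 3, nrounds = 3, max_depth = 3, min_child_weight = 3,
              colsample_bytree = 3, gamma = 1, subsample = 1)
n.combos <- prod(n.values)              # 3^5 = 243
n.resamples <- 10 * 3                   # number = 10 folds, repeats = 3
print(n.combos * n.resamples)           # 7290 combination/resample pairs

# Repeated k-fold CV: for each repeat, shuffle rows into k roughly equal folds
repeated_folds <- function(n.rows, k = 10, repeats = 3) {
  lapply(seq_len(repeats), function(r) sample(rep(seq_len(k), length.out = n.rows)))
}

set.seed(42)
folds <- repeated_folds(n.rows = 25)

# Fold sizes for each repeat (one column per repeat)
print(sapply(folds, tabulate, nbins = 10))
```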

Test and Evaluate Model

The model is ready, so we test it on the test dataset created during the split. For a continuous outcome variable, we can evaluate the model using Root Mean Squared Error (RMSE), R-squared, Min-Max Accuracy and Mean Absolute Percentage Error (MAPE).

# Making Predictions

preds <- predict(caret.cv, housing.test)

# Evaluation using RMSE, R-squared and MAE (postResample reports all three)

postResample(preds,housing.test$Price)

# Evaluation using min max accuracy and MAPE

actuals_preds <- data.frame(cbind(actuals=housing.test$Price, predicteds=preds))

head(actuals_preds)

min_max_accuracy <- mean(apply(actuals_preds, 1, min) / apply(actuals_preds, 1, max))

mape <- mean(abs((actuals_preds$predicteds - actuals_preds$actuals))/actuals_preds$actuals)

We can run the same evaluation code on the training dataset and compare the results with those on the test dataset. Doing this for different feature sets also shows whether the model overfits. If more variables are added to the feature list, you would see training accuracy increase but test accuracy decrease slightly, which means the model is starting to overfit.
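Since the same metrics are computed for both the training and the test set, it can help to wrap them in one function. Here is a minimal base-R sketch. `evaluate_predictions` is a hypothetical helper name. R-squared is computed as the squared correlation between predictions and actual values, which is how caret's `postResample` defines it.

```r
# Hypothetical helper: all evaluation metrics for one set of predictions
evaluate_predictions <- function(actual, predicted) {
  errors <- predicted - actual
  pairs  <- cbind(actual, predicted)
  c(RMSE         = sqrt(mean(errors^2)),
    Rsquared     = cor(actual, predicted)^2,
    MAE          = mean(abs(errors)),
    M_M_Accuracy = mean(apply(pairs, 1, min) / apply(pairs, 1, max)),
    MAPE         = mean(abs(errors) / actual))
}

# Made-up prices and predictions
actual    <- c(1000000, 750000, 1500000, 600000)
predicted <- c(1050000, 700000, 1400000, 660000)
m <- evaluate_predictions(actual, predicted)
print(round(m, 4))
```

To use it on the real data, call `evaluate_predictions(housing.test$Price, preds)` for the test set. For the training set, call it with `housing.train$Price` and `predict(caret.cv, housing.train)`.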

Output

| Metric | Training Dataset | Testing Dataset | Ideal Value |
|---|---|---|---|
| RMSE | 1.523547e+05 | 2.730161e+05 | 0 |
| R-Squared | 9.474203e-01 | 8.274691e-01 | 1 |
| M_M_Accuracy | 0.9024803 | 0.8723883 | 1 |
| MAPE | 0.0977454 | 0.1545473 | 0 |

The M_M_Accuracy is quite good in this scenario. It is the ratio of the smaller to the larger of the actual and predicted prices, so it measures relative error. A prediction that is off by 10,000 on a 1,000,000 house therefore lowers it only slightly, which matches the intuition that such an error does not matter much at that price.
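A worked example makes this concrete. It uses made-up prices and computes min-max accuracy for a single prediction:

```r
# Made-up example: a 1,000,000 house predicted at 1,010,000
actual <- 1000000
predicted <- 1010000

# Min-max accuracy for a single prediction: smaller value / larger value
mm.accuracy <- min(actual, predicted) / max(actual, predicted)
print(round(mm.accuracy, 4))   # 0.9901

# The same 10,000 error on a 200,000 house lowers it more
print(round(min(200000, 210000) / max(200000, 210000), 4))   # 0.9524
```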

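`RMSE` looks high because it is measured in the same units as Price. The test RMSE of 2.730161e+05 corresponds to a typical error of roughly 273,000. With prices this large, RMSE should be judged relative to typical house prices rather than in isolation. MAPE, at about 15% on the test set, gives a scale-free view of the error.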
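A common way to put RMSE on an interpretable scale is to divide it by the mean of the actual values, which is called normalized RMSE. Here is a minimal sketch with made-up prices where every prediction is 5% too high. The RMSE comes out in the tens of thousands, yet relative to the average price it is only about 5%:

```r
# Made-up prices; every prediction is 5% too high
actual <- c(800000, 1200000, 1500000, 2500000)
predicted <- actual * 1.05

# RMSE is in the same units as Price, so it looks large
rmse <- sqrt(mean((predicted - actual)^2))
print(round(rmse))

# Normalized RMSE: RMSE divided by the mean actual price
nrmse <- rmse / mean(actual)
print(round(nrmse, 4))
```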

To improve accuracy further, feature engineering is needed. I created a few features from the Address and YearBuilt variables, but they didn't help. I will update this article if I get better results.
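As a starting point for that kind of experiment, here is a minimal sketch of two hypothetical features. These are not the features tried for this article:

* the age of the house at the time of sale (sale year minus YearBuilt)
* the street type, taken from the last word of Address

The rows are made up, and the Date format is assumed to match the `'%d/%m/%Y'` format used in the Date plot.

```r
# Made-up rows with the relevant column names
toy <- data.frame(
  Address   = c("85 Turner St", "25 Bloomburg St", "5 Charles St", "40 Federation La"),
  Date      = c("3/12/2016", "4/02/2016", "4/03/2017", "4/03/2017"),
  YearBuilt = c(1900, 1900, 1900, 2014),
  stringsAsFactors = FALSE
)

# Hypothetical feature 1: age at sale = year of the sale date minus YearBuilt
sale.year <- as.numeric(format(as.Date(toy$Date, "%d/%m/%Y"), "%Y"))
toy$Age <- sale.year - toy$YearBuilt

# Hypothetical feature 2: street type = text after the last space in Address
toy$StreetType <- sub(".*\\s", "", toy$Address)

print(toy[, c("Age", "StreetType")])
```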

You can find the full R code in the GitHub repo.

For any question, feedback or suggestion, feel free to comment below.