Introducing Convolutional Neural Networks

In this tutorial, I will introduce Convolutional Neural Networks (CNNs). They are more computationally expensive than the simple neural network, but they are much more accurate on image data. The Python code from the previous tutorial will be converted into a Convolutional Neural Network.

In a Convolutional Neural Network, the same weights are shared across all positions of the input. This relies on a property called statistical invariance: what we are looking for does not depend on where (or when) it appears. For example, an image of a cat still shows a cat whether the cat sits in the bottom-left or the top-right corner of the picture.
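To make weight sharing concrete, here is a small back-of-the-envelope sketch in plain Python. It assumes a 28×28 grayscale input (an assumption, since the image size is not fixed in this section), the 5×5 patch and depth of 16 used in this tutorial, and a stride of 2 with 'SAME' padding, so the output is 14×14×16. It compares the convolution's parameter count with a hypothetical fully connected layer that produces an output of the same size.

```python
import math

# Assumed sizes for illustration only.
image_size = 28      # assumed 28x28 input
num_channels = 1     # grayscale
patch_size = 5
depth = 16
stride = 2           # with 'SAME' padding the output is ceil(28 / 2) = 14
out_size = math.ceil(image_size / stride)

# Convolution: one patch_size x patch_size x num_channels filter per output
# channel, reused at every position of the image (weight sharing).
conv_params = patch_size * patch_size * num_channels * depth + depth  # + biases

# Hypothetical fully connected layer producing the same 14x14x16 output:
# every input pixel gets its own weight to every output unit.
fc_inputs = image_size * image_size * num_channels
fc_outputs = out_size * out_size * depth
fc_params = fc_inputs * fc_outputs + fc_outputs  # + biases

print("convolution parameters:", conv_params)    # 416
print("fully connected parameters:", fc_params)  # 2461760
```

Because the same 400 weights are reused at every position, the convolution needs thousands of times fewer parameters than the fully connected layer.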

Below I have attached a video from the Udacity course that I took:

A CNN is essentially a collection of tiny neural networks that run over the image in small patches (also called kernels). How far a patch moves at each step is set by a parameter called the stride.
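The sliding-patch idea can be sketched in plain Python. `conv2d_single` below is a hypothetical helper (my own name, not a TensorFlow function) that handles a single channel, a square kernel, no bias, and 'VALID' padding, meaning the patch never goes past the image edge. Like TensorFlow's `conv2d`, it computes a cross-correlation, so the kernel is not flipped.

```python
def conv2d_single(image, kernel, stride):
    """Slide one shared square kernel over a 2D image.

    Uses 'VALID' padding (patches never extend past the edge) and no bias.
    image and kernel are lists of lists of numbers; stride is how many
    pixels the patch moves between positions, both across and down.
    """
    k = len(kernel)
    height, width = len(image), len(image[0])
    output = []
    for top in range(0, height - k + 1, stride):
        row = []
        for left in range(0, width - k + 1, stride):
            # The same kernel weights are applied at every patch position.
            total = 0
            for i in range(k):
                for j in range(k):
                    total += image[top + i][left + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


image = [[1, 2, 3, 4],
         [5, 6, 7, 8],
         [9, 10, 11, 12],
         [13, 14, 15, 16]]
kernel = [[1, 1],
          [1, 1]]

print(conv2d_single(image, kernel, stride=2))  # [[14, 22], [46, 54]]
print(conv2d_single(image, kernel, stride=1))  # [[14, 18, 22], [30, 34, 38], [46, 50, 54]]
```

With stride 2 the 2×2 patch visits only 4 positions of the 4×4 image, while with stride 1 it visits 9, so a larger stride gives a smaller output.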

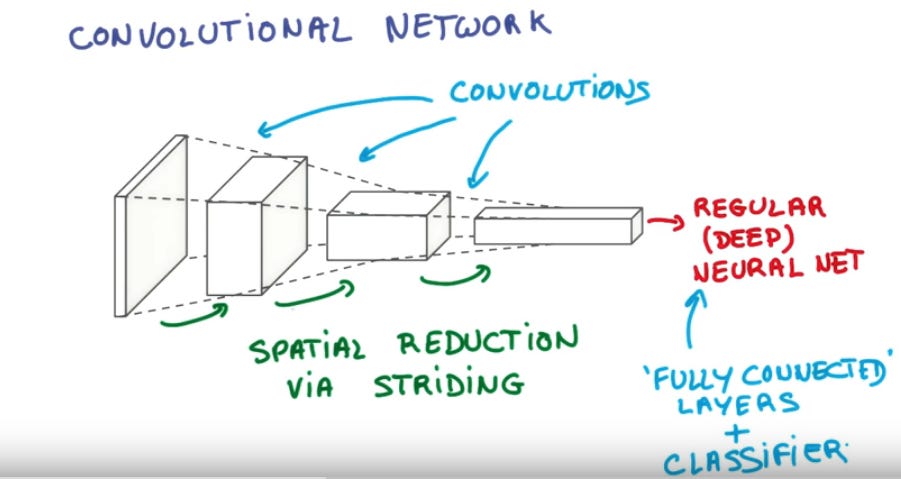

Both the input and the output of a CNN are 3-dimensional (width, height, and depth), but their shapes are very different, as you can observe in the image: layer by layer the data gets spatially smaller and deeper, basically forming a pyramid.

The output shape depends on several factors, including the stride. A stride greater than 1 reduces the spatial size, which gives the CNN structure its pyramid shape. Setting the stride to 1 (with 'SAME' padding) keeps the spatial size unchanged, so it would not produce the pyramid shown above.
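The effect of the stride on the output size can be computed directly. The sketch below follows the output-size rules documented for TensorFlow's convolutions: with 'SAME' padding the output size is ceil(input / stride), and with 'VALID' padding it is ceil((input - patch + 1) / stride). The 28×28 input and the two stacked layers are assumptions for illustration.

```python
import math


def conv_output_size(in_size, patch_size, stride, padding):
    """Spatial output size of one conv layer, following TensorFlow's rules."""
    if padding == "SAME":
        return math.ceil(in_size / stride)
    if padding == "VALID":
        return math.ceil((in_size - patch_size + 1) / stride)
    raise ValueError("padding must be 'SAME' or 'VALID'")


# Two stacked layers (assumed: 28x28 input, 5x5 patches, 'SAME' padding).
for stride in (2, 1):
    size = 28
    sizes = [size]
    for _ in range(2):
        size = conv_output_size(size, 5, stride, "SAME")
        sizes.append(size)
    print(f"stride {stride}: {sizes}")
# stride 2: [28, 14, 7]
# stride 1: [28, 28, 28]
```

With stride 2 each layer halves the width and height, which is what produces the pyramid; with stride 1 and 'SAME' padding the size never shrinks.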

From the code perspective, not much changes.

The reformatting step needs to be modified, because each image must now be defined in 3D: width, height, and channels, where the channels can be RGB (3) or grayscale (1).
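As a rough illustration of that reformatting, here is a pure-Python sketch. The `reformat` signature is hypothetical (a NumPy version would simply reshape the array). It assumes each image arrives as a flat list of pixels in row-major, channel-last order, turns it into a height × width × channels nested list, and one-hot encodes the labels.

```python
def reformat(dataset, labels, image_size, num_channels, num_labels):
    """Hypothetical pure-Python reformat for a CNN.

    dataset: list of flat pixel lists, each of length
             image_size * image_size * num_channels, in row-major,
             channel-last order.
    labels:  list of integer class ids in range(num_labels).
    Returns (images, one_hot) where each image is a nested list indexed
    as image[row][column][channel].
    """
    pixels_per_image = image_size * image_size * num_channels
    images = []
    for flat in dataset:
        if len(flat) != pixels_per_image:
            raise ValueError("unexpected number of pixels in an image")
        image = [
            [
                [float(flat[(r * image_size + c) * num_channels + ch])
                 for ch in range(num_channels)]
                for c in range(image_size)
            ]
            for r in range(image_size)
        ]
        images.append(image)
    one_hot = [[1.0 if k == label else 0.0 for k in range(num_labels)]
               for label in labels]
    return images, one_hot


images, one_hot = reformat([[0, 1, 2, 3]], [1],
                           image_size=2, num_channels=1, num_labels=3)
print(images)   # [[[[0.0], [1.0]], [[2.0], [3.0]]]]
print(one_hot)  # [[0.0, 1.0, 0.0]]
```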

```python
num_channels = 1  # grayscale (use 3 for RGB)
```

The patch size and the depth also need to be defined:

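```python
patch_size = 5  # each patch (kernel) covers 5x5 pixels
depth = 16      # number of filters, i.e. output channels of the layer
```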

The shapes of the training, validation, and test datasets are all modified. For example, the training placeholder becomes:

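```python
import tensorflow as tf  # TensorFlow 1.x API (tf.placeholder)

# batch_size and image_size come from the previous tutorial's code.
tf_train_dataset = tf.placeholder(
    tf.float32, shape=(batch_size, image_size, image_size, num_channels))
```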

The weights of the first layer also change:

```python
# Shape: [patch height, patch width, input channels, output channels (depth)]
layer1_weights = tf.Variable(tf.truncated_normal(
    [patch_size, patch_size, num_channels, depth], stddev=0.1))
```

In the model function, a convolution operation needs to be introduced:

```python
conv = tf.nn.conv2d(data, layer1_weights, [1, 2, 2, 1], padding='SAME')
```

The `[1, 2, 2, 1]` in this code are the stride values, one for each of the four input dimensions: batch size, image height, image width, and number of channels (depth). We use 2x2 striding, meaning the patch moves by 2 pixels in both height and width, while the batch and channel strides stay at 1.

In the code you will see a ReLU function again and might wonder why it is there: it introduces non-linearity.
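To see why the non-linearity matters, here is a toy sketch with scalar "layers" (the weights are made up for illustration). Without an activation function, two stacked linear layers collapse into a single linear function, so the extra layer adds nothing; putting a ReLU between them breaks that. The last lines apply ReLU element-wise to a small feature map, the same way `tf.nn.relu` acts on a convolution's output.

```python
def relu(x):
    """Rectified linear unit: keep positive values, replace negatives with 0."""
    return max(0.0, x)


# Two toy "layers" that are purely linear: weight * input + bias.
def layer1(x):
    return 2.0 * x + 1.0


def layer2(x):
    return -3.0 * x + 0.5


def stacked_linear(x):
    # No activation: equals -6x - 2.5, just another linear function.
    return layer2(layer1(x))


def stacked_relu(x):
    # ReLU between the layers: -6x - 2.5 when x >= -0.5, constant 0.5 otherwise.
    return layer2(relu(layer1(x)))


for x in (-2.0, -1.0, 0.0, 1.0):
    print(x, stacked_linear(x), stacked_relu(x))

# ReLU is applied element-wise, e.g. to a convolution's feature map.
feature_map = [[-1.0, 2.0], [0.5, -3.0]]
activated = [[relu(v) for v in row] for row in feature_map]
print(activated)  # [[0.0, 2.0], [0.5, 0.0]]
```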

Get the multi-layer CNN Python code from GitHub. This code does not use any regularization, pooling, or learning-rate decay; it is simply the basic neural network model we learned at the start, converted into a CNN with multiple layers. After running the downloaded code, you will see how much the accuracy increases with a CNN.