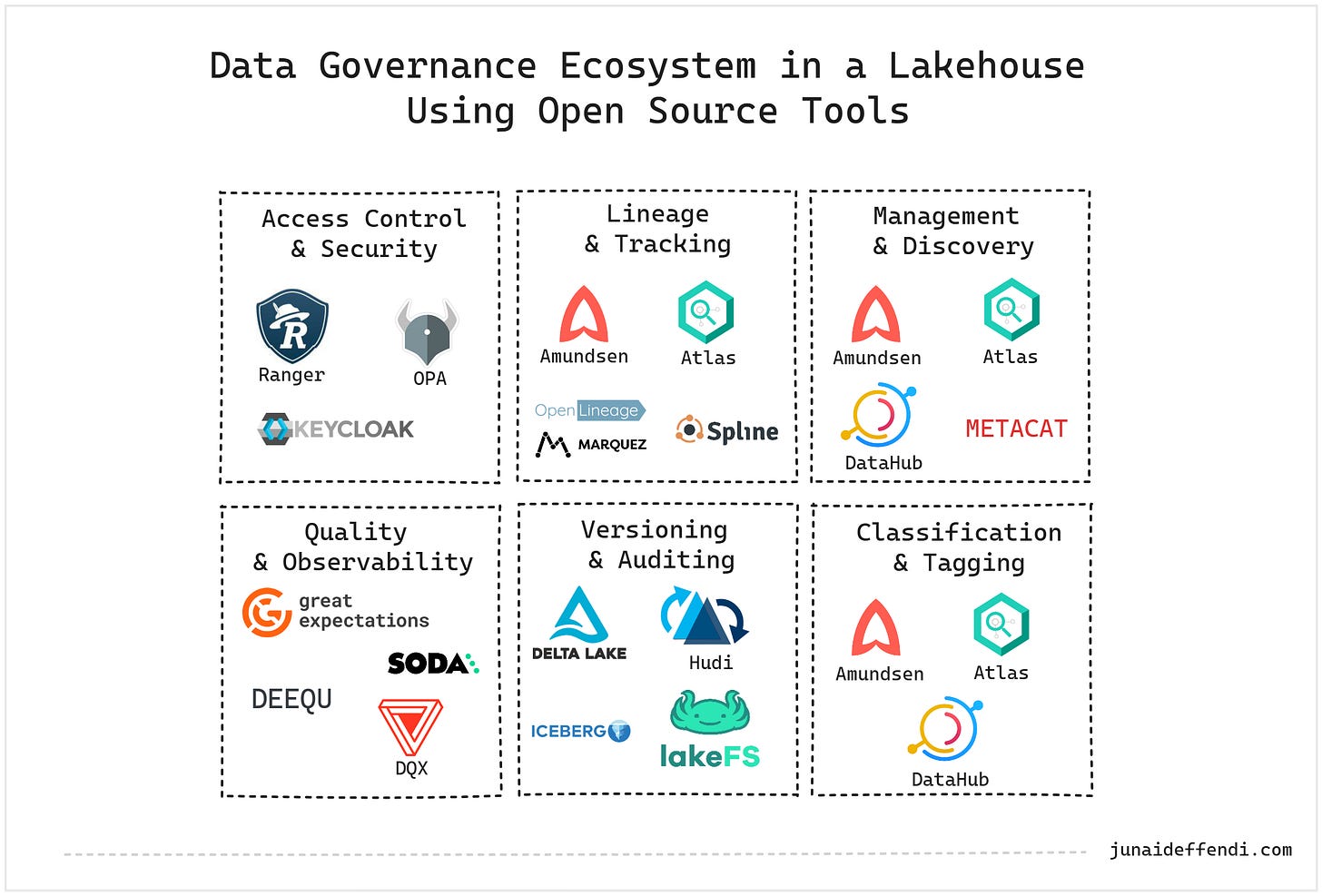

Data Governance in Lakehouse Using Open Source Tools

Discover how to build a complete data governance ecosystem in a Lakehouse architecture using leading open-source tools. Explore access control, metadata management, lineage, quality and more.

As organizations adopt the Lakehouse architecture, which blends the flexibility of data lakes with the reliability of data warehouses, the need for robust data governance becomes critical. But good governance doesn’t have to mean expensive vendor tools. With a smart selection of open-source tools, you can enforce policies, ensure data quality, and maintain compliance across your entire data platform.

⭐ While these tools are not limited to Lakehouses and can apply to lakes, warehouses, and hybrid architectures, they are especially critical for Lakehouses where governance capabilities must often be assembled independently.

The goal of this article is to provide a high-level overview of Lakehouse governance using open-source tools. In future articles, I will dive deeper into these technologies.

Access Control & Security

Goal: Control who can access what data and under what conditions.

Apache Ranger: Centralized policy management for fine-grained access control at the database, table, column, and row level, enforced through plugins for engines such as Hive.

Ranger can govern Lakehouse tables that are registered in the Hive Metastore.

It works well with Apache Atlas, whose classifications can drive tag-based policies.

Keycloak: An identity and access management server rather than a data-specific tool; it secures the services and applications around the data. It integrates with tools like Trino, Airflow, and Superset to manage access to UIs and APIs through SSO and role-based access control.

Open Policy Agent (OPA): General-purpose policy engine for access control enforcement, especially when integrated with metadata platforms like DataHub.
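To make "who can access what data and under what conditions" concrete, here is a minimal sketch of table-, column-, and row-level enforcement in plain Python. It is not the Ranger or OPA API; the `Policy` class, the `read_table` function, the role names, and the sample data are all hypothetical and only illustrate the idea of a deny-by-default policy check.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Set

Row = Dict[str, object]


@dataclass
class Policy:
    """Hypothetical policy: which role may read which columns and rows of a table."""
    role: str
    table: str
    allowed_columns: Set[str]
    # Optional row-level filter; None means every row is visible.
    row_filter: Optional[Callable[[Row], bool]] = None


def read_table(role: str, table: str, rows: List[Row], policies: List[Policy]) -> List[Row]:
    """Return only the rows and columns that the role's policy allows."""
    matching = [p for p in policies if p.role == role and p.table == table]
    if not matching:
        # Deny by default when no policy grants access.
        raise PermissionError(f"role {role!r} has no access to {table!r}")
    policy = matching[0]
    visible = [r for r in rows if policy.row_filter is None or policy.row_filter(r)]
    return [{c: v for c, v in r.items() if c in policy.allowed_columns} for r in visible]


# Illustrative data and policies (assumptions, not taken from any real system).
customers = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": "b@example.com", "country": "US"},
]

policies = [
    # Analysts see only German customers and never the email column.
    Policy("analyst_eu", "customers", {"id", "country"}, lambda r: r["country"] == "DE"),
    # Admins see everything.
    Policy("admin", "customers", {"id", "email", "country"}),
]

analyst_view = read_table("analyst_eu", "customers", customers, policies)
print(analyst_view)
```

In a real deployment the policies would live in a central service and be enforced inside the query engine, so that every client sees the same filtered view.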

Data Lineage & Tracking

Goal: Understand how data moves, transforms, and is consumed across systems.

Amundsen: Basic lineage tracking through metadata relationships and a user-friendly UI, emphasizing search rather than detailed lineage.

Apache Atlas: Rich lineage capabilities, tracking data flow across systems like Hive, HDFS, Kafka, and Lakehouse tables (through Hive Metastore), and providing insights into data transformations and processing workflows.

OpenLineage + Marquez: OpenLineage defines an open standard for collecting lineage metadata from pipelines; Marquez provides a metadata service with a UI for search and graph-based exploration.

OpenLineage events can also be consumed by other catalogs; DataHub, for example, offers an OpenLineage integration.

Spline: Captures runtime data lineage from Apache Spark applications with minimal code changes.
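As a rough illustration of how lineage tools reason about data flow, the sketch below builds simplified, OpenLineage-style run events as plain dictionaries and derives a downstream graph from them. The field names (`eventType`, `eventTime`, `run`, `job`, `inputs`, `outputs`) follow the OpenLineage event model, but this is a reduced subset: a spec-compliant event needs further fields such as a producer and schema URL. The job names, dataset names, and helper functions are hypothetical.

```python
import json
import uuid
from collections import defaultdict
from datetime import datetime, timezone


def make_run_event(job_name, inputs, outputs, namespace="demo"):
    """Build a simplified, OpenLineage-style COMPLETE event as a plain dict."""
    return {
        "eventType": "COMPLETE",
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "run": {"runId": str(uuid.uuid4())},
        "job": {"namespace": namespace, "name": job_name},
        "inputs": [{"namespace": namespace, "name": n} for n in inputs],
        "outputs": [{"namespace": namespace, "name": n} for n in outputs],
    }


def build_downstream_graph(events):
    """Map each input dataset to the set of datasets written from it."""
    graph = defaultdict(set)
    for event in events:
        for src in event["inputs"]:
            for dst in event["outputs"]:
                graph[src["name"]].add(dst["name"])
    return graph


def downstream_of(graph, dataset):
    """Return every dataset reachable from `dataset` (a basic impact analysis)."""
    seen, stack = set(), [dataset]
    while stack:
        current = stack.pop()
        for nxt in graph.get(current, ()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


# Two hypothetical pipeline runs: raw -> silver -> gold.
events = [
    make_run_event("clean_orders", ["raw.orders"], ["silver.orders"]),
    make_run_event("daily_revenue", ["silver.orders"], ["gold.revenue"]),
]

graph = build_downstream_graph(events)
impacted = downstream_of(graph, "raw.orders")
print(json.dumps(events[0], indent=2))
print(sorted(impacted))
```

A backend such as Marquez would receive events like these over HTTP and maintain the graph for you; the traversal here only shows why emitting inputs and outputs per run is enough to answer "what breaks if this table changes?".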

Metadata Management & Discovery

Goal: Make data assets easily discoverable, understandable, and governed.

Amundsen: Metadata and search engine with user-friendly UI, showing dataset schemas and ownership. Built on Neo4j and Elasticsearch.

Apache Atlas: Metadata catalog with support for taxonomies, classifications, and integration with security tools.

DataHub: Comprehensive metadata platform supporting powerful metadata discovery, faceted search, schema versioning, data ownership, and impact analysis.

Metacat: Metadata and dataset catalog from Netflix, supporting multiple storage systems and integration with Hive and Presto.

Data Quality & Observability

Goal: Ensure data meets business expectations through validation and monitoring.

Great Expectations: Framework for defining "expectations" on datasets (e.g., no nulls, unique keys). Integrates into pipelines for runtime validations.

Soda Core: CLI-based tool for rule-based data profiling and monitoring, detecting freshness, duplicates, and other issues.

Deequ: Library built on Apache Spark for declaring constraints on datasets (completeness, uniqueness) and verifying them at scale on Spark DataFrames.

DQX: Data quality framework from Databricks Labs for defining and running checks on Spark DataFrames, integrated with Delta Lake and the wider Lakehouse ecosystem.
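The tools above share one core idea: declare a rule, run it against the data, and report a pass or fail. The following plain-Python sketch shows that idea for the two examples mentioned earlier (no nulls, unique keys). It does not use the Great Expectations, Soda Core, Deequ, or DQX APIs; the function names, the result format, and the sample rows are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List

Row = Dict[str, object]


def expect_not_null(rows: List[Row], column: str) -> dict:
    """Check that no row has a missing value in `column`."""
    failures = sum(1 for r in rows if r.get(column) is None)
    return {"check": f"not_null({column})", "success": failures == 0, "failures": failures}


def expect_unique(rows: List[Row], column: str) -> dict:
    """Check that every value in `column` appears at most once."""
    counts = Counter(r.get(column) for r in rows)
    duplicates = sum(n - 1 for n in counts.values() if n > 1)
    return {"check": f"unique({column})", "success": duplicates == 0, "failures": duplicates}


# Hypothetical batch with one null amount and one duplicated key.
orders = [
    {"order_id": 1, "amount": 10.0},
    {"order_id": 2, "amount": None},
    {"order_id": 2, "amount": 5.0},
]

results = [
    expect_not_null(orders, "order_id"),
    expect_not_null(orders, "amount"),
    expect_unique(orders, "order_id"),
]

failed = [r["check"] for r in results if not r["success"]]
print(failed)
```

In a pipeline, a non-empty `failed` list would typically stop the load or route the batch to quarantine instead of publishing it.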

Data Versioning & Auditing

Goal: Enable reproducibility, rollback, and audit trails for datasets.

Delta Lake: Provides ACID-compliant versioning, intuitive time travel by version or timestamp, rollback to previous states, and full audit logs via a transaction log.

Apache Hudi: Enables versioned data with commit timelines, supports time travel through instant queries, allows rollback of failed writes, and maintains an auditable history.

Apache Iceberg: Tracks data through immutable snapshots, supports time travel and rollback via snapshot IDs, and logs detailed metadata for auditing.

lakeFS: Git-like version control for data lakes, enabling branching, committing, and merging of data.
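Time travel, rollback, and audit history all follow from keeping an append-only log of commits. The toy class below sketches that mechanism in plain Python. It is a conceptual model only: real table formats record file-level changes rather than full copies of the rows, and the `VersionedTable` class and its method names are hypothetical, not any library's API.

```python
from typing import Dict, List, Optional, Tuple

Row = Dict[str, object]


class VersionedTable:
    """Toy append-only commit log; each commit stores a full snapshot of the rows."""

    def __init__(self) -> None:
        self._log: List[dict] = []  # list index doubles as the version number

    def commit(self, rows: List[Row], operation: str) -> int:
        """Append a new snapshot and return its version number."""
        self._log.append({"operation": operation, "rows": [dict(r) for r in rows]})
        return len(self._log) - 1

    def read(self, version: Optional[int] = None) -> List[Row]:
        """Read the latest snapshot, or an older one by version (time travel)."""
        if not self._log:
            return []
        if version is None:
            version = len(self._log) - 1
        if not 0 <= version < len(self._log):
            raise IndexError(f"unknown version {version}")
        return [dict(r) for r in self._log[version]["rows"]]

    def restore(self, version: int) -> int:
        """Roll back by committing an old snapshot again; history is never rewritten."""
        return self.commit(self.read(version), f"RESTORE to v{version}")

    def history(self) -> List[Tuple[int, str]]:
        """Audit trail: every version with the operation that produced it."""
        return [(v, entry["operation"]) for v, entry in enumerate(self._log)]


table = VersionedTable()
v0 = table.commit([{"id": 1}], "WRITE")
v1 = table.commit([{"id": 1}, {"id": 2}], "APPEND")
v2 = table.restore(v0)

print(table.read())      # current state after the rollback
print(table.read(v1))    # time travel to the state before the rollback
print(table.history())
```

Modelling the rollback as a new commit is a design choice in this sketch: it keeps the audit trail complete, because the bad write and its reversal both remain visible in the history.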

Data Classification & Tagging

Goal: Categorize and protect sensitive or regulated datasets appropriately.

Amundsen: Offers lightweight tagging and ownership attribution, helping teams document and search datasets, though with limited support for hierarchical glossaries or complex policies.

Apache Atlas: Provides advanced data classification with custom tags, business glossaries, and automated lineage-based tag propagation for enterprise-grade metadata governance.

Commonly used with Apache Ranger.

DataHub: Supports dynamic classification through tags and glossary terms, with policy enforcement and access control via seamless integration with Open Policy Agent (OPA).
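Lineage-based tag propagation, as described for Apache Atlas above, means that a classification applied to a source dataset is inherited by everything derived from it. The sketch below shows the idea in plain Python. It is not the Atlas or DataHub API; the `propagate_tags` function, the dataset names, and the `PII` tag are hypothetical.

```python
from collections import deque
from typing import Dict, List, Set


def propagate_tags(lineage: Dict[str, List[str]], tags: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Push each dataset's tags to all of its downstream datasets.

    lineage maps an upstream dataset to its direct downstream datasets;
    tags maps a dataset to the tags assigned to it directly.
    """
    result = {dataset: set(assigned) for dataset, assigned in tags.items()}
    queue = deque(tags)
    while queue:
        current = queue.popleft()
        for child in lineage.get(current, []):
            child_tags = result.setdefault(child, set())
            missing = result[current] - child_tags
            if missing:
                # Only revisit a child when it gained a tag, so cycles terminate.
                child_tags |= missing
                queue.append(child)
    return result


# Hypothetical lineage: customers flow raw -> silver -> gold; products join in at gold.
lineage = {
    "raw.customers": ["silver.customers"],
    "silver.customers": ["gold.customer_stats"],
    "raw.products": ["gold.customer_stats"],
}
tags = {"raw.customers": {"PII"}}

effective = propagate_tags(lineage, tags)
print({name: sorted(t) for name, t in effective.items()})
```

Once the effective tags are known, an access policy can be written against the tag (for example, "mask anything tagged PII") instead of against each table individually.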

Users:

Several of these tools were built at large companies and run in production at scale: Amundsen originated at Lyft, DataHub at LinkedIn, and Metacat at Netflix.

⭐ Unity Catalog: The current open-source release offers basic features. If it gains fuller governance capabilities, it could become a powerful, modern tool covering many of the functions mentioned above.

Final Thoughts

Governance doesn’t have to be expensive or complex. By stitching together the right open-source tools, you can build a highly governed, secure, and observable Lakehouse architecture without locking into proprietary platforms.

Next Steps for Readers

If you're interested in learning more, I encourage you to follow future deep dives.

If you are looking to adopt, consider the following:

Identify governance gaps in your current architecture.

Pilot lightweight tools (e.g., Soda Core, Great Expectations) before scaling.

Gradually build a centralized metadata and lineage system.

Automate access controls and classification tagging as your data grows.

💬 Let me know in the comments if I missed something.

Might want to take a look at Egeria (https://egeria-project.org), which is a very comprehensive open source project under the Linux Foundation. Egeria supports distributed heterogeneous federation of a wide variety of information as well as active management and governance.